Initial commit

This commit is contained in:

parent

054cbd03c4

commit

b6dbdad80f

|

|

@ -0,0 +1,27 @@

|

||||||

|

name: Django CI

|

||||||

|

|

||||||

|

on:

|

||||||

|

push:

|

||||||

|

branches: [ main ]

|

||||||

|

pull_request:

|

||||||

|

branches: [ main ]

|

||||||

|

|

||||||

|

jobs:

|

||||||

|

test:

|

||||||

|

runs-on: ubuntu-latest

|

||||||

|

steps:

|

||||||

|

- uses: actions/checkout@v2

|

||||||

|

- name: Set up Python

|

||||||

|

uses: actions/setup-python@v2

|

||||||

|

with:

|

||||||

|

python-version: 3.7

|

||||||

|

- name: Install Dependencies

|

||||||

|

run: |

|

||||||

|

python -m pip install --upgrade pip

|

||||||

|

pip install -r requirements.txt

|

||||||

|

- name: Run Tests

|

||||||

|

env:

|

||||||

|

DJANGO_SETTINGS_MODULE: academic_graph.settings.test

|

||||||

|

SECRET_KEY: ${{ secrets.DJANGO_SECRET_KEY }}

|

||||||

|

run: |

|

||||||

|

python manage.py test --noinput

|

||||||

|

|

@ -0,0 +1,18 @@

|

||||||

|

# PyCharm

|

||||||

|

/.idea/

|

||||||

|

|

||||||

|

# Python

|

||||||

|

__pycache__/

|

||||||

|

|

||||||

|

# 数据集

|

||||||

|

/data/

|

||||||

|

|

||||||

|

# 保存的模型

|

||||||

|

/model/

|

||||||

|

|

||||||

|

# 日志输出目录

|

||||||

|

/output/

|

||||||

|

|

||||||

|

# Django

|

||||||

|

/static/

|

||||||

|

/.mylogin.cnf

|

||||||

411

README.md

411

README.md

|

|

@ -1,3 +1,410 @@

|

||||||

# GNNRecom

|

# 基于图神经网络的异构图表示学习和推荐算法研究

|

||||||

|

|

||||||

毕业设计:基于图神经网络的异构图表示学习和推荐算法研究。包含基于对比学习的关系感知异构图神经网络(Relation-aware Heterogeneous Graph Neural Network with Contrastive Learning, RHCO)、基于图神经网络的学术推荐算法(Graph Neural Network based Academic Recommendation Algorithm, GARec),详细设计见md文件。

|

## 目录结构

|

||||||

|

|

||||||

|

```

|

||||||

|

GNN-Recommendation/

|

||||||

|

gnnrec/ 算法模块顶级包

|

||||||

|

hge/ 异构图表示学习模块

|

||||||

|

kgrec/ 基于图神经网络的推荐算法模块

|

||||||

|

data/ 数据集目录(已添加.gitignore)

|

||||||

|

model/ 模型保存目录(已添加.gitignore)

|

||||||

|

img/ 图片目录

|

||||||

|

academic_graph/ Django项目模块

|

||||||

|

rank/ Django应用

|

||||||

|

manage.py Django管理脚本

|

||||||

|

```

|

||||||

|

|

||||||

|

## 安装依赖

|

||||||

|

|

||||||

|

Python 3.7

|

||||||

|

|

||||||

|

### CUDA 11.0

|

||||||

|

|

||||||

|

```shell

|

||||||

|

pip install -r requirements_cuda.txt

|

||||||

|

```

|

||||||

|

|

||||||

|

### CPU

|

||||||

|

|

||||||

|

```shell

|

||||||

|

pip install -r requirements.txt

|

||||||

|

```

|

||||||

|

|

||||||

|

## 异构图表示学习(附录)

|

||||||

|

|

||||||

|

基于对比学习的关系感知异构图神经网络(Relation-aware Heterogeneous Graph Neural Network with Contrastive Learning, RHCO)

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

### 实验

|

||||||

|

|

||||||

|

见 [readme](gnnrec/hge/readme.md)

|

||||||

|

|

||||||

|

## 基于图神经网络的推荐算法(附录)

|

||||||

|

|

||||||

|

基于图神经网络的学术推荐算法(Graph Neural Network based Academic Recommendation Algorithm, GARec)

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

### 实验

|

||||||

|

|

||||||

|

见 [readme](gnnrec/kgrec/readme.md)

|

||||||

|

|

||||||

|

## Django 配置

|

||||||

|

|

||||||

|

### MySQL 数据库配置

|

||||||

|

|

||||||

|

1. 创建数据库及用户

|

||||||

|

|

||||||

|

```sql

|

||||||

|

CREATE DATABASE academic_graph CHARACTER SET utf8mb4;

|

||||||

|

CREATE USER 'academic_graph'@'%' IDENTIFIED BY 'password';

|

||||||

|

GRANT ALL ON academic_graph.* TO 'academic_graph'@'%';

|

||||||

|

```

|

||||||

|

|

||||||

|

2. 在根目录下创建文件.mylogin.cnf

|

||||||

|

|

||||||

|

```ini

|

||||||

|

[client]

|

||||||

|

host = x.x.x.x

|

||||||

|

port = 3306

|

||||||

|

user = username

|

||||||

|

password = password

|

||||||

|

database = database

|

||||||

|

default-character-set = utf8mb4

|

||||||

|

```

|

||||||

|

|

||||||

|

3. 创建数据库表

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python manage.py makemigrations --settings=academic_graph.settings.prod rank

|

||||||

|

python manage.py migrate --settings=academic_graph.settings.prod

|

||||||

|

```

|

||||||

|

|

||||||

|

4. 导入 oag-cs 数据集

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python manage.py loadoagcs --settings=academic_graph.settings.prod

|

||||||

|

```

|

||||||

|

|

||||||

|

注:由于导入一次时间很长(约 9 小时),为了避免中途发生错误,可以先用 data/oag/test 中的测试数据调试一下

|

||||||

|

|

||||||

|

### 拷贝静态文件

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python manage.py collectstatic --settings=academic_graph.settings.prod

|

||||||

|

```

|

||||||

|

|

||||||

|

### 启动 Web 服务器

|

||||||

|

|

||||||

|

```shell

|

||||||

|

export SECRET_KEY=xxx

|

||||||

|

python manage.py runserver --settings=academic_graph.settings.prod 0.0.0.0:8000

|

||||||

|

```

|

||||||

|

|

||||||

|

### 系统截图

|

||||||

|

|

||||||

|

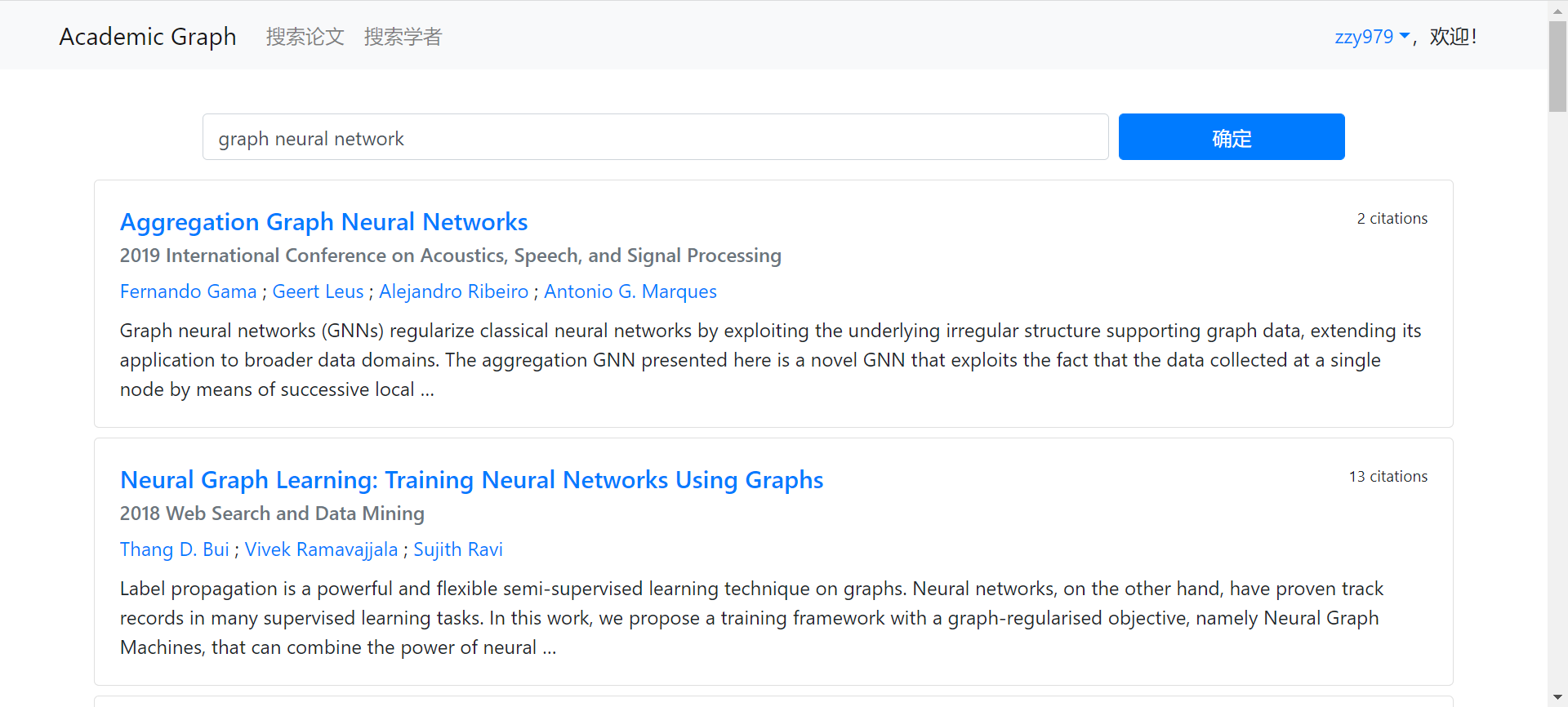

搜索论文

|

||||||

|

|

||||||

|

|

||||||

|

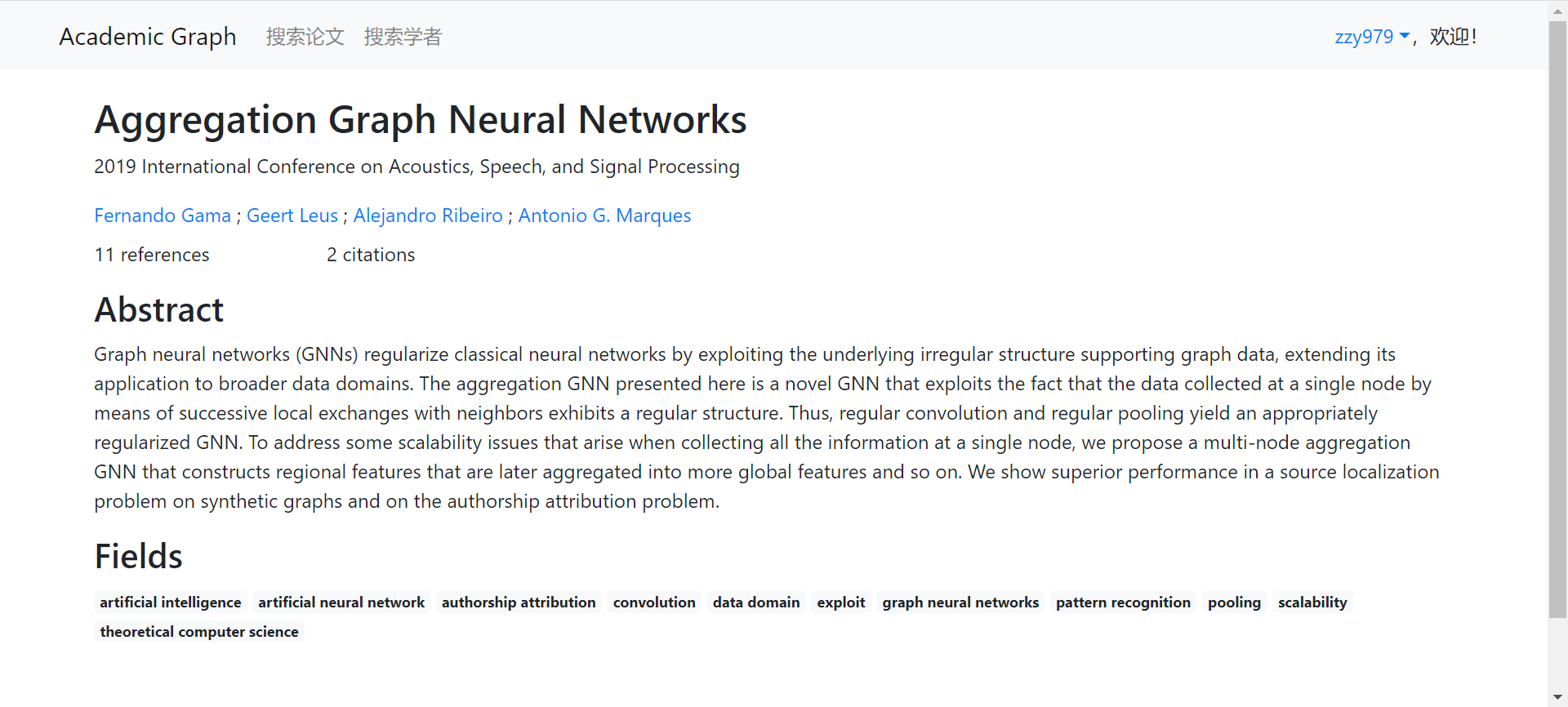

论文详情

|

||||||

|

|

||||||

|

|

||||||

|

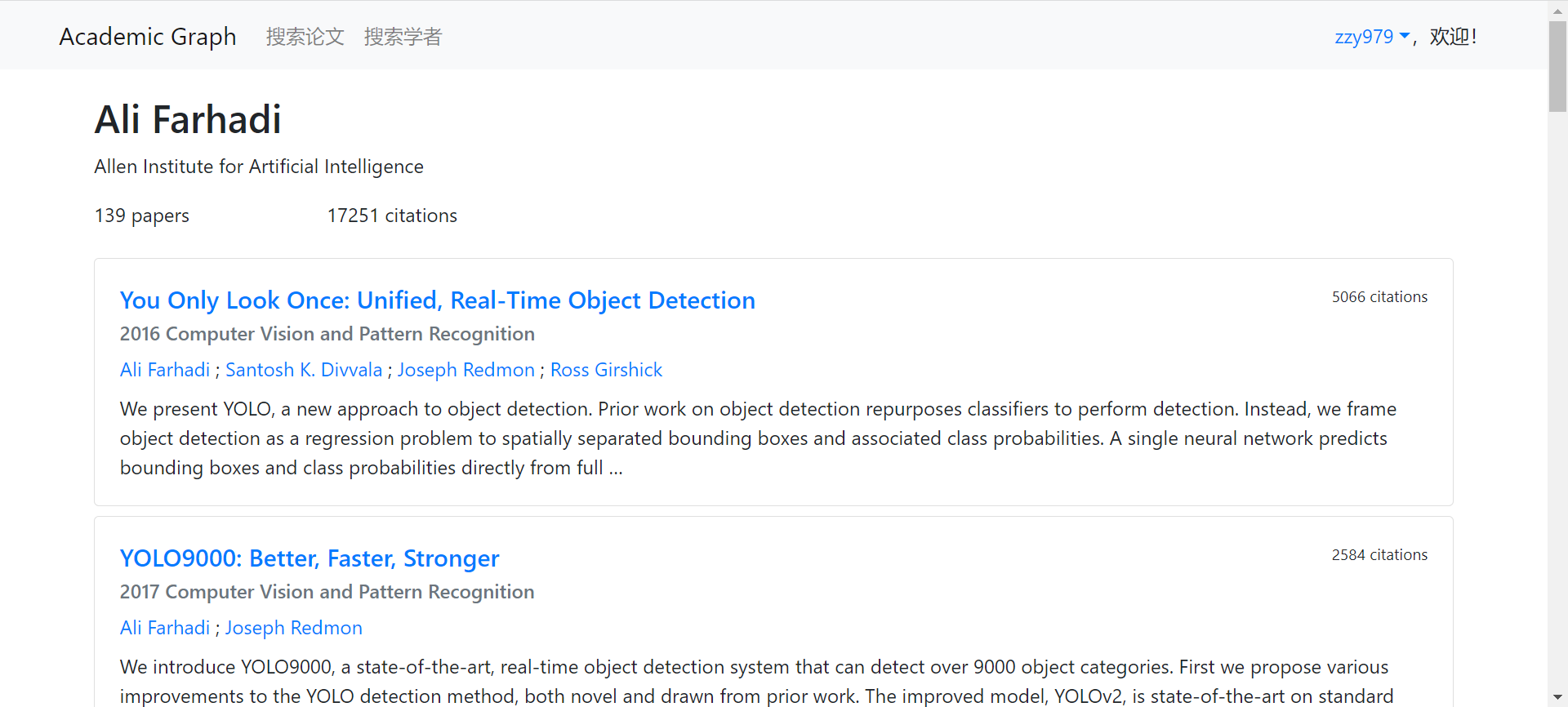

搜索学者

|

||||||

|

|

||||||

|

|

||||||

|

学者详情

|

||||||

|

|

||||||

|

|

||||||

|

## 附录

|

||||||

|

|

||||||

|

### 基于图神经网络的推荐算法

|

||||||

|

|

||||||

|

#### 数据集

|

||||||

|

|

||||||

|

oag-cs - 使用 OAG 微软学术数据构造的计算机领域的学术网络(见 [readme](data/readme.md))

|

||||||

|

|

||||||

|

#### 预训练顶点嵌入

|

||||||

|

|

||||||

|

使用 metapath2vec(随机游走 +word2vec)预训练顶点嵌入,作为 GNN 模型的顶点输入特征

|

||||||

|

|

||||||

|

1. 随机游走

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python -m gnnrec.kgrec.random_walk model/word2vec/oag_cs_corpus.txt

|

||||||

|

```

|

||||||

|

|

||||||

|

2. 训练词向量

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python -m gnnrec.hge.metapath2vec.train_word2vec --size=128 --workers=8 model/word2vec/oag_cs_corpus.txt model/word2vec/oag_cs.model

|

||||||

|

```

|

||||||

|

|

||||||

|

#### 召回

|

||||||

|

|

||||||

|

使用微调后的 SciBERT 模型(见 [readme](data/readme.md) 第 2 步)将查询词编码为向量,与预先计算好的论文标题向量计算余弦相似度,取 top k

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python -m gnnrec.kgrec.recall

|

||||||

|

```

|

||||||

|

|

||||||

|

召回结果示例:

|

||||||

|

|

||||||

|

graph neural network

|

||||||

|

|

||||||

|

```

|

||||||

|

0.9629 Aggregation Graph Neural Networks

|

||||||

|

0.9579 Neural Graph Learning: Training Neural Networks Using Graphs

|

||||||

|

0.9556 Heterogeneous Graph Neural Network

|

||||||

|

0.9552 Neural Graph Machines: Learning Neural Networks Using Graphs

|

||||||

|

0.9490 On the choice of graph neural network architectures

|

||||||

|

0.9474 Measuring and Improving the Use of Graph Information in Graph Neural Networks

|

||||||

|

0.9362 Challenging the generalization capabilities of Graph Neural Networks for network modeling

|

||||||

|

0.9295 Strategies for Pre-training Graph Neural Networks

|

||||||

|

0.9142 Supervised Neural Network Models for Processing Graphs

|

||||||

|

0.9112 Geometrically Principled Connections in Graph Neural Networks

|

||||||

|

```

|

||||||

|

|

||||||

|

recommendation algorithm based on knowledge graph

|

||||||

|

|

||||||

|

```

|

||||||

|

0.9172 Research on Video Recommendation Algorithm Based on Knowledge Reasoning of Knowledge Graph

|

||||||

|

0.8972 An Improved Recommendation Algorithm in Knowledge Network

|

||||||

|

0.8558 A personalized recommendation algorithm based on interest graph

|

||||||

|

0.8431 An Improved Recommendation Algorithm Based on Graph Model

|

||||||

|

0.8334 The Research of Recommendation Algorithm based on Complete Tripartite Graph Model

|

||||||

|

0.8220 Recommendation Algorithm based on Link Prediction and Domain Knowledge in Retail Transactions

|

||||||

|

0.8167 Recommendation Algorithm Based on Graph-Model Considering User Background Information

|

||||||

|

0.8034 A Tripartite Graph Recommendation Algorithm Based on Item Information and User Preference

|

||||||

|

0.7774 Improvement of TF-IDF Algorithm Based on Knowledge Graph

|

||||||

|

0.7770 Graph Searching Algorithms for Semantic-Social Recommendation

|

||||||

|

```

|

||||||

|

|

||||||

|

scholar disambiguation

|

||||||

|

|

||||||

|

```

|

||||||

|

0.9690 Scholar search-oriented author disambiguation

|

||||||

|

0.9040 Author name disambiguation in scientific collaboration and mobility cases

|

||||||

|

0.8901 Exploring author name disambiguation on PubMed-scale

|

||||||

|

0.8852 Author Name Disambiguation in Heterogeneous Academic Networks

|

||||||

|

0.8797 KDD Cup 2013: author disambiguation

|

||||||

|

0.8796 A survey of author name disambiguation techniques: 2010–2016

|

||||||

|

0.8721 Who is Who: Name Disambiguation in Large-Scale Scientific Literature

|

||||||

|

0.8660 Use of ResearchGate and Google CSE for author name disambiguation

|

||||||

|

0.8643 Automatic Methods for Disambiguating Author Names in Bibliographic Data Repositories

|

||||||

|

0.8641 A brief survey of automatic methods for author name disambiguation

|

||||||

|

```

|

||||||

|

|

||||||

|

### 精排

|

||||||

|

|

||||||

|

#### 构造 ground truth

|

||||||

|

|

||||||

|

(1)验证集

|

||||||

|

|

||||||

|

从 AMiner 发布的 [AI 2000 人工智能全球最具影响力学者榜单](https://www.aminer.cn/ai2000) 抓取人工智能 20 个子领域的 top 100 学者

|

||||||

|

|

||||||

|

```shell

|

||||||

|

pip install scrapy>=2.3.0

|

||||||

|

cd gnnrec/kgrec/data/preprocess

|

||||||

|

scrapy runspider ai2000_crawler.py -a save_path=/home/zzy/GNN-Recommendation/data/rank/ai2000.json

|

||||||

|

```

|

||||||

|

|

||||||

|

与 oag-cs 数据集的学者匹配,并人工确认一些排名较高但未匹配上的学者,作为学者排名 ground truth 验证集

|

||||||

|

|

||||||

|

```shell

|

||||||

|

export DJANGO_SETTINGS_MODULE=academic_graph.settings.common

|

||||||

|

export SECRET_KEY=xxx

|

||||||

|

python -m gnnrec.kgrec.data.preprocess.build_author_rank build-val

|

||||||

|

```

|

||||||

|

|

||||||

|

(2)训练集

|

||||||

|

|

||||||

|

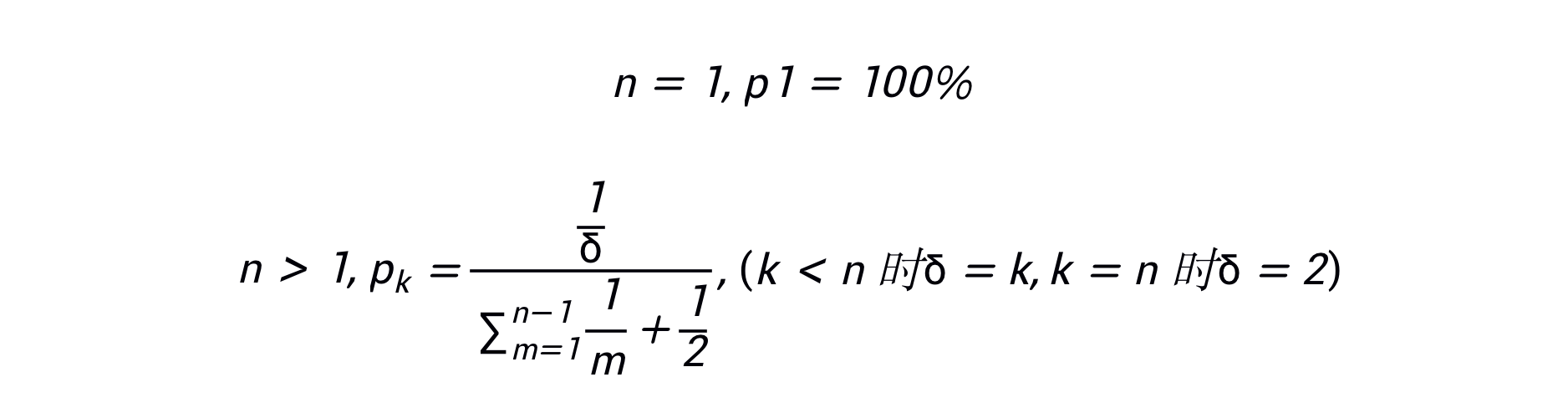

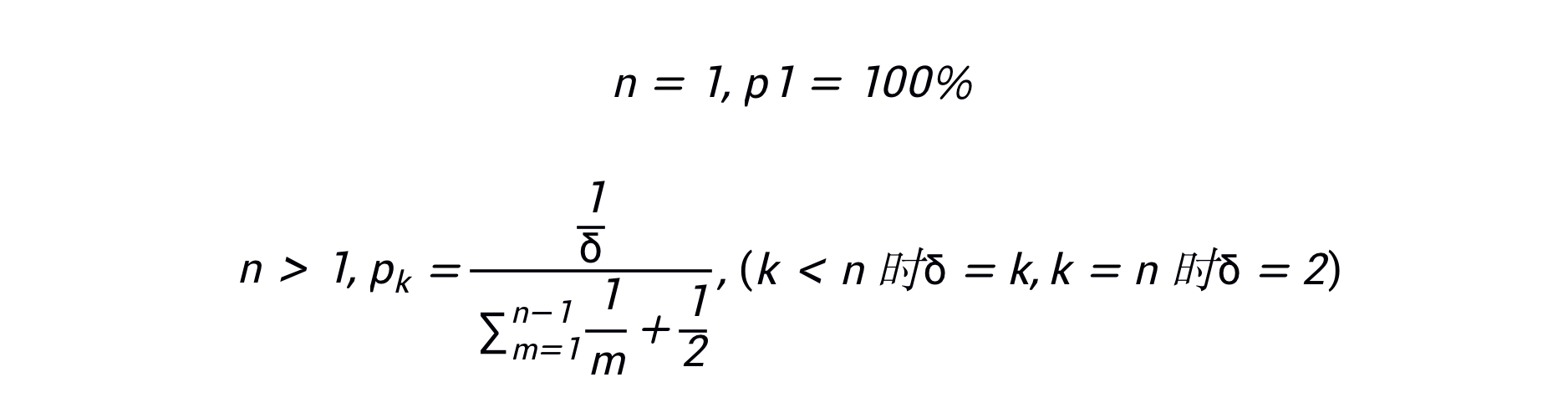

参考 AI 2000 的计算公式,根据某个领域的论文引用数加权求和构造学者排名,作为 ground truth 训练集

|

||||||

|

|

||||||

|

计算公式:

|

||||||

|

|

||||||

|

即:假设一篇论文有 n 个作者,第 k 作者的权重为 1/k,最后一个视为通讯作者,权重为 1/2,归一化之后计算论文引用数的加权求和

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python -m gnnrec.kgrec.data.preprocess.build_author_rank build-train

|

||||||

|

```

|

||||||

|

|

||||||

|

(3)评估 ground truth 训练集的质量

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python -m gnnrec.kgrec.data.preprocess.build_author_rank eval

|

||||||

|

```

|

||||||

|

|

||||||

|

```

|

||||||

|

nDGC@100=0.2420 Precision@100=0.1859 Recall@100=0.2016

|

||||||

|

nDGC@50=0.2308 Precision@50=0.2494 Recall@50=0.1351

|

||||||

|

nDGC@20=0.2492 Precision@20=0.3118 Recall@20=0.0678

|

||||||

|

nDGC@10=0.2743 Precision@10=0.3471 Recall@10=0.0376

|

||||||

|

nDGC@5=0.3165 Precision@5=0.3765 Recall@5=0.0203

|

||||||

|

```

|

||||||

|

|

||||||

|

(4)采样三元组

|

||||||

|

|

||||||

|

从学者排名训练集中采样三元组(t, ap, an),表示对于领域 t,学者 ap 的排名在 an 之前

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python -m gnnrec.kgrec.data.preprocess.build_author_rank sample

|

||||||

|

```

|

||||||

|

|

||||||

|

#### 训练 GNN 模型

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python -m gnnrec.kgrec.train model/word2vec/oag-cs.model model/garec_gnn.pt data/rank/author_embed.pt

|

||||||

|

```

|

||||||

|

|

||||||

|

## 异构图表示学习

|

||||||

|

|

||||||

|

### 数据集

|

||||||

|

|

||||||

|

* [ACM](https://github.com/liun-online/HeCo/tree/main/data/acm) - ACM 学术网络数据集

|

||||||

|

* [DBLP](https://github.com/liun-online/HeCo/tree/main/data/dblp) - DBLP 学术网络数据集

|

||||||

|

* [ogbn-mag](https://ogb.stanford.edu/docs/nodeprop/#ogbn-mag) - OGB 提供的微软学术数据集

|

||||||

|

* [oag-venue](../kgrec/data/venue.py) - oag-cs 期刊分类数据集

|

||||||

|

|

||||||

|

| 数据集 | 顶点数 | 边数 | 目标顶点 | 类别数 |

|

||||||

|

| --------- | ------- | -------- | -------- | ------ |

|

||||||

|

| ACM | 11246 | 34852 | paper | 3 |

|

||||||

|

| DBLP | 26128 | 239566 | author | 4 |

|

||||||

|

| ogbn-mag | 1939743 | 21111007 | paper | 349 |

|

||||||

|

| oag-venue | 4235169 | 34520417 | paper | 360 |

|

||||||

|

|

||||||

|

### Baselines

|

||||||

|

|

||||||

|

* [R-GCN](https://arxiv.org/pdf/1703.06103)

|

||||||

|

* [HGT](https://arxiv.org/pdf/2003.01332)

|

||||||

|

* [HGConv](https://arxiv.org/pdf/2012.14722)

|

||||||

|

* [R-HGNN](https://arxiv.org/pdf/2105.11122)

|

||||||

|

* [C&S](https://arxiv.org/pdf/2010.13993)

|

||||||

|

* [HeCo](https://arxiv.org/pdf/2105.09111)

|

||||||

|

|

||||||

|

#### R-GCN (full batch)

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python -m gnnrec.hge.rgcn.train --dataset=acm --epochs=10

|

||||||

|

python -m gnnrec.hge.rgcn.train --dataset=dblp --epochs=10

|

||||||

|

python -m gnnrec.hge.rgcn.train --dataset=ogbn-mag --num-hidden=48

|

||||||

|

python -m gnnrec.hge.rgcn.train --dataset=oag-venue --num-hidden=48 --epochs=30

|

||||||

|

```

|

||||||

|

|

||||||

|

(使用 minibatch 训练准确率就是只有 20% 多,不知道为什么)

|

||||||

|

|

||||||

|

#### 预训练顶点嵌入

|

||||||

|

|

||||||

|

使用 metapath2vec(随机游走 +word2vec)预训练顶点嵌入,作为 GNN 模型的顶点输入特征

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python -m gnnrec.hge.metapath2vec.random_walk model/word2vec/ogbn-mag_corpus.txt

|

||||||

|

python -m gnnrec.hge.metapath2vec.train_word2vec --size=128 --workers=8 model/word2vec/ogbn-mag_corpus.txt model/word2vec/ogbn-mag.model

|

||||||

|

```

|

||||||

|

|

||||||

|

#### HGT

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python -m gnnrec.hge.hgt.train_full --dataset=acm

|

||||||

|

python -m gnnrec.hge.hgt.train_full --dataset=dblp

|

||||||

|

python -m gnnrec.hge.hgt.train --dataset=ogbn-mag --node-embed-path=model/word2vec/ogbn-mag.model --epochs=40

|

||||||

|

python -m gnnrec.hge.hgt.train --dataset=oag-venue --node-embed-path=model/word2vec/oag-cs.model --epochs=40

|

||||||

|

```

|

||||||

|

|

||||||

|

#### HGConv

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python -m gnnrec.hge.hgconv.train_full --dataset=acm --epochs=5

|

||||||

|

python -m gnnrec.hge.hgconv.train_full --dataset=dblp --epochs=20

|

||||||

|

python -m gnnrec.hge.hgconv.train --dataset=ogbn-mag --node-embed-path=model/word2vec/ogbn-mag.model

|

||||||

|

python -m gnnrec.hge.hgconv.train --dataset=oag-venue --node-embed-path=model/word2vec/oag-cs.model

|

||||||

|

```

|

||||||

|

|

||||||

|

#### R-HGNN

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python -m gnnrec.hge.rhgnn.train_full --dataset=acm --num-layers=1 --epochs=15

|

||||||

|

python -m gnnrec.hge.rhgnn.train_full --dataset=dblp --epochs=20

|

||||||

|

python -m gnnrec.hge.rhgnn.train --dataset=ogbn-mag model/word2vec/ogbn-mag.model

|

||||||

|

python -m gnnrec.hge.rhgnn.train --dataset=oag-venue --epochs=50 model/word2vec/oag-cs.model

|

||||||

|

```

|

||||||

|

|

||||||

|

#### C&S

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python -m gnnrec.hge.cs.train --dataset=acm --epochs=5

|

||||||

|

python -m gnnrec.hge.cs.train --dataset=dblp --epochs=5

|

||||||

|

python -m gnnrec.hge.cs.train --dataset=ogbn-mag --prop-graph=data/graph/pos_graph_ogbn-mag_t5.bin

|

||||||

|

python -m gnnrec.hge.cs.train --dataset=oag-venue --prop-graph=data/graph/pos_graph_oag-venue_t5.bin

|

||||||

|

```

|

||||||

|

|

||||||

|

#### HeCo

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python -m gnnrec.hge.heco.train --dataset=ogbn-mag model/word2vec/ogbn-mag.model data/graph/pos_graph_ogbn-mag_t5.bin

|

||||||

|

python -m gnnrec.hge.heco.train --dataset=oag-venue model/word2vec/oag-cs.model data/graph/pos_graph_oag-venue_t5.bin

|

||||||

|

```

|

||||||

|

|

||||||

|

(ACM 和 DBLP 的数据来自 [https://github.com/ZZy979/pytorch-tutorial/tree/master/gnn/heco](https://github.com/ZZy979/pytorch-tutorial/tree/master/gnn/heco) ,准确率和 Micro-F1 相等)

|

||||||

|

|

||||||

|

#### RHCO

|

||||||

|

|

||||||

|

基于对比学习的关系感知异构图神经网络(Relation-aware Heterogeneous Graph Neural Network with Contrastive Learning, RHCO)

|

||||||

|

|

||||||

|

在 HeCo 的基础上改进:

|

||||||

|

|

||||||

|

* 网络结构编码器中的注意力向量改为关系的表示(类似于 R-HGNN)

|

||||||

|

* 正样本选择方式由元路径条数改为预训练的 HGT 计算的注意力权重、训练集使用真实标签

|

||||||

|

* 元路径视图编码器改为正样本图编码器,适配 mini-batch 训练

|

||||||

|

* Loss 增加分类损失,训练方式由无监督改为半监督

|

||||||

|

* 在最后增加 C&S 后处理步骤

|

||||||

|

|

||||||

|

ACM

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python -m gnnrec.hge.hgt.train_full --dataset=acm --save-path=model/hgt/hgt_acm.pt

|

||||||

|

python -m gnnrec.hge.rhco.build_pos_graph_full --dataset=acm --num-samples=5 --use-label model/hgt/hgt_acm.pt data/graph/pos_graph_acm_t5l.bin

|

||||||

|

python -m gnnrec.hge.rhco.train_full --dataset=acm data/graph/pos_graph_acm_t5l.bin

|

||||||

|

```

|

||||||

|

|

||||||

|

DBLP

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python -m gnnrec.hge.hgt.train_full --dataset=dblp --save-path=model/hgt/hgt_dblp.pt

|

||||||

|

python -m gnnrec.hge.rhco.build_pos_graph_full --dataset=dblp --num-samples=5 --use-label model/hgt/hgt_dblp.pt data/graph/pos_graph_dblp_t5l.bin

|

||||||

|

python -m gnnrec.hge.rhco.train_full --dataset=dblp --use-data-pos data/graph/pos_graph_dblp_t5l.bin

|

||||||

|

```

|

||||||

|

|

||||||

|

ogbn-mag(第 3 步如果中断可使用--load-path 参数继续训练)

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python -m gnnrec.hge.hgt.train --dataset=ogbn-mag --node-embed-path=model/word2vec/ogbn-mag.model --epochs=40 --save-path=model/hgt/hgt_ogbn-mag.pt

|

||||||

|

python -m gnnrec.hge.rhco.build_pos_graph --dataset=ogbn-mag --num-samples=5 --use-label model/word2vec/ogbn-mag.model model/hgt/hgt_ogbn-mag.pt data/graph/pos_graph_ogbn-mag_t5l.bin

|

||||||

|

python -m gnnrec.hge.rhco.train --dataset=ogbn-mag --num-hidden=64 --contrast-weight=0.9 model/word2vec/ogbn-mag.model data/graph/pos_graph_ogbn-mag_t5l.bin model/rhco_ogbn-mag_d64_a0.9_t5l.pt

|

||||||

|

python -m gnnrec.hge.rhco.smooth --dataset=ogbn-mag model/word2vec/ogbn-mag.model data/graph/pos_graph_ogbn-mag_t5l.bin model/rhco_ogbn-mag_d64_a0.9_t5l.pt

|

||||||

|

```

|

||||||

|

|

||||||

|

oag-venue

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python -m gnnrec.hge.hgt.train --dataset=oag-venue --node-embed-path=model/word2vec/oag-cs.model --epochs=40 --save-path=model/hgt/hgt_oag-venue.pt

|

||||||

|

python -m gnnrec.hge.rhco.build_pos_graph --dataset=oag-venue --num-samples=5 --use-label model/word2vec/oag-cs.model model/hgt/hgt_oag-venue.pt data/graph/pos_graph_oag-venue_t5l.bin

|

||||||

|

python -m gnnrec.hge.rhco.train --dataset=oag-venue --num-hidden=64 --contrast-weight=0.9 model/word2vec/oag-cs.model data/graph/pos_graph_oag-venue_t5l.bin model/rhco_oag-venue.pt

|

||||||

|

python -m gnnrec.hge.rhco.smooth --dataset=oag-venue model/word2vec/oag-cs.model data/graph/pos_graph_oag-venue_t5l.bin model/rhco_oag-venue.pt

|

||||||

|

```

|

||||||

|

|

||||||

|

消融实验

|

||||||

|

|

||||||

|

```shell

|

||||||

|

python -m gnnrec.hge.rhco.train --dataset=ogbn-mag --model=RHCO_sc model/word2vec/ogbn-mag.model data/graph/pos_graph_ogbn-mag_t5l.bin model/rhco_sc_ogbn-mag.pt

|

||||||

|

python -m gnnrec.hge.rhco.train --dataset=ogbn-mag --model=RHCO_pg model/word2vec/ogbn-mag.model data/graph/pos_graph_ogbn-mag_t5l.bin model/rhco_pg_ogbn-mag.pt

|

||||||

|

```

|

||||||

|

|

||||||

|

### 实验结果

|

||||||

|

|

||||||

|

[顶点分类](gnnrec/hge/result/node_classification.csv)

|

||||||

|

|

||||||

|

[参数敏感性分析](gnnrec/hge/result/param_analysis.csv)

|

||||||

|

|

||||||

|

[消融实验](gnnrec/hge/result/ablation_study.csv)

|

||||||

|

|

|

||||||

|

|

@ -0,0 +1,16 @@

|

||||||

|

"""

|

||||||

|

ASGI config for academic_graph project.

|

||||||

|

|

||||||

|

It exposes the ASGI callable as a module-level variable named ``application``.

|

||||||

|

|

||||||

|

For more information on this file, see

|

||||||

|

https://docs.djangoproject.com/en/3.2/howto/deployment/asgi/

|

||||||

|

"""

|

||||||

|

|

||||||

|

import os

|

||||||

|

|

||||||

|

from django.core.asgi import get_asgi_application

|

||||||

|

|

||||||

|

os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'academic_graph.settings')

|

||||||

|

|

||||||

|

application = get_asgi_application()

|

||||||

|

|

@ -0,0 +1,136 @@

|

||||||

|

"""

|

||||||

|

Django settings for academic_graph project.

|

||||||

|

|

||||||

|

Generated by 'django-admin startproject' using Django 3.2.8.

|

||||||

|

|

||||||

|

For more information on this file, see

|

||||||

|

https://docs.djangoproject.com/en/3.2/topics/settings/

|

||||||

|

|

||||||

|

For the full list of settings and their values, see

|

||||||

|

https://docs.djangoproject.com/en/3.2/ref/settings/

|

||||||

|

"""

|

||||||

|

import os

|

||||||

|

from pathlib import Path

|

||||||

|

|

||||||

|

# Build paths inside the project like this: BASE_DIR / 'subdir'.

|

||||||

|

BASE_DIR = Path(__file__).resolve().parent.parent.parent

|

||||||

|

|

||||||

|

|

||||||

|

# Quick-start development settings - unsuitable for production

|

||||||

|

# See https://docs.djangoproject.com/en/3.2/howto/deployment/checklist/

|

||||||

|

|

||||||

|

# SECURITY WARNING: keep the secret key used in production secret!

|

||||||

|

SECRET_KEY = os.environ['SECRET_KEY']

|

||||||

|

|

||||||

|

# SECURITY WARNING: don't run with debug turned on in production!

|

||||||

|

DEBUG = False

|

||||||

|

|

||||||

|

ALLOWED_HOSTS = ['localhost', '127.0.0.1', '[::1]', '10.2.4.100']

|

||||||

|

|

||||||

|

|

||||||

|

# Application definition

|

||||||

|

|

||||||

|

INSTALLED_APPS = [

|

||||||

|

'django.contrib.admin',

|

||||||

|

'django.contrib.auth',

|

||||||

|

'django.contrib.contenttypes',

|

||||||

|

'django.contrib.sessions',

|

||||||

|

'django.contrib.messages',

|

||||||

|

'django.contrib.staticfiles',

|

||||||

|

'rank',

|

||||||

|

]

|

||||||

|

|

||||||

|

MIDDLEWARE = [

|

||||||

|

'django.middleware.security.SecurityMiddleware',

|

||||||

|

'django.contrib.sessions.middleware.SessionMiddleware',

|

||||||

|

'django.middleware.common.CommonMiddleware',

|

||||||

|

'django.middleware.csrf.CsrfViewMiddleware',

|

||||||

|

'django.contrib.auth.middleware.AuthenticationMiddleware',

|

||||||

|

'django.contrib.messages.middleware.MessageMiddleware',

|

||||||

|

'django.middleware.clickjacking.XFrameOptionsMiddleware',

|

||||||

|

]

|

||||||

|

|

||||||

|

ROOT_URLCONF = 'academic_graph.urls'

|

||||||

|

|

||||||

|

TEMPLATES = [

|

||||||

|

{

|

||||||

|

'BACKEND': 'django.template.backends.django.DjangoTemplates',

|

||||||

|

'DIRS': [],

|

||||||

|

'APP_DIRS': True,

|

||||||

|

'OPTIONS': {

|

||||||

|

'context_processors': [

|

||||||

|

'django.template.context_processors.debug',

|

||||||

|

'django.template.context_processors.request',

|

||||||

|

'django.contrib.auth.context_processors.auth',

|

||||||

|

'django.contrib.messages.context_processors.messages',

|

||||||

|

],

|

||||||

|

},

|

||||||

|

},

|

||||||

|

]

|

||||||

|

|

||||||

|

WSGI_APPLICATION = 'academic_graph.wsgi.application'

|

||||||

|

|

||||||

|

|

||||||

|

# Database

|

||||||

|

# https://docs.djangoproject.com/en/3.2/ref/settings/#databases

|

||||||

|

|

||||||

|

DATABASES = {

|

||||||

|

'default': {

|

||||||

|

'ENGINE': 'django.db.backends.mysql',

|

||||||

|

'OPTIONS': {

|

||||||

|

'read_default_file': '.mylogin.cnf',

|

||||||

|

'charset': 'utf8mb4',

|

||||||

|

},

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

|

||||||

|

# Password validation

|

||||||

|

# https://docs.djangoproject.com/en/3.2/ref/settings/#auth-password-validators

|

||||||

|

|

||||||

|

AUTH_PASSWORD_VALIDATORS = [

|

||||||

|

{

|

||||||

|

'NAME': 'django.contrib.auth.password_validation.UserAttributeSimilarityValidator',

|

||||||

|

},

|

||||||

|

{

|

||||||

|

'NAME': 'django.contrib.auth.password_validation.MinimumLengthValidator',

|

||||||

|

},

|

||||||

|

{

|

||||||

|

'NAME': 'django.contrib.auth.password_validation.CommonPasswordValidator',

|

||||||

|

},

|

||||||

|

{

|

||||||

|

'NAME': 'django.contrib.auth.password_validation.NumericPasswordValidator',

|

||||||

|

},

|

||||||

|

]

|

||||||

|

|

||||||

|

|

||||||

|

# Internationalization

|

||||||

|

# https://docs.djangoproject.com/en/3.2/topics/i18n/

|

||||||

|

|

||||||

|

LANGUAGE_CODE = 'zh-hans'

|

||||||

|

|

||||||

|

TIME_ZONE = 'Asia/Shanghai'

|

||||||

|

|

||||||

|

USE_I18N = True

|

||||||

|

|

||||||

|

USE_L10N = True

|

||||||

|

|

||||||

|

USE_TZ = True

|

||||||

|

|

||||||

|

|

||||||

|

# Static files (CSS, JavaScript, Images)

|

||||||

|

# https://docs.djangoproject.com/en/3.2/howto/static-files/

|

||||||

|

|

||||||

|

STATIC_URL = '/static/'

|

||||||

|

STATIC_ROOT = BASE_DIR / 'static'

|

||||||

|

|

||||||

|

# Default primary key field type

|

||||||

|

# https://docs.djangoproject.com/en/3.2/ref/settings/#default-auto-field

|

||||||

|

|

||||||

|

DEFAULT_AUTO_FIELD = 'django.db.models.BigAutoField'

|

||||||

|

|

||||||

|

LOGIN_URL = 'rank:login'

|

||||||

|

|

||||||

|

# 自定义设置

|

||||||

|

PAGE_SIZE = 20

|

||||||

|

TESTING = True

|

||||||

|

|

@ -0,0 +1,6 @@

|

||||||

|

from .common import * # noqa

|

||||||

|

|

||||||

|

DEBUG = True

|

||||||

|

|

||||||

|

# 自定义设置

|

||||||

|

TESTING = False

|

||||||

|

|

@ -0,0 +1,6 @@

|

||||||

|

from .common import * # noqa

|

||||||

|

|

||||||

|

DEBUG = False

|

||||||

|

|

||||||

|

# 自定义设置

|

||||||

|

TESTING = False

|

||||||

|

|

@ -0,0 +1,11 @@

|

||||||

|

from .common import * # noqa

|

||||||

|

|

||||||

|

DATABASES = {

|

||||||

|

'default': {

|

||||||

|

'ENGINE': 'django.db.backends.sqlite3',

|

||||||

|

'NAME': BASE_DIR / 'test.sqlite3',

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

# 自定义设置

|

||||||

|

TESTING = True

|

||||||

|

|

@ -0,0 +1,25 @@

|

||||||

|

"""academic_graph URL Configuration

|

||||||

|

|

||||||

|

The `urlpatterns` list routes URLs to views. For more information please see:

|

||||||

|

https://docs.djangoproject.com/en/3.2/topics/http/urls/

|

||||||

|

Examples:

|

||||||

|

Function views

|

||||||

|

1. Add an import: from my_app import views

|

||||||

|

2. Add a URL to urlpatterns: path('', views.home, name='home')

|

||||||

|

Class-based views

|

||||||

|

1. Add an import: from other_app.views import Home

|

||||||

|

2. Add a URL to urlpatterns: path('', Home.as_view(), name='home')

|

||||||

|

Including another URLconf

|

||||||

|

1. Import the include() function: from django.urls import include, path

|

||||||

|

2. Add a URL to urlpatterns: path('blog/', include('blog.urls'))

|

||||||

|

"""

|

||||||

|

from django.conf import settings

|

||||||

|

from django.contrib import admin

|

||||||

|

from django.urls import path, include

|

||||||

|

from django.views import static

|

||||||

|

|

||||||

|

urlpatterns = [

|

||||||

|

path('admin/', admin.site.urls),

|

||||||

|

path('static/<path:path>', static.serve, {'document_root': settings.STATIC_ROOT}, name='static'),

|

||||||

|

path('rank/', include('rank.urls')),

|

||||||

|

]

|

||||||

|

|

@ -0,0 +1,16 @@

|

||||||

|

"""

|

||||||

|

WSGI config for academic_graph project.

|

||||||

|

|

||||||

|

It exposes the WSGI callable as a module-level variable named ``application``.

|

||||||

|

|

||||||

|

For more information on this file, see

|

||||||

|

https://docs.djangoproject.com/en/3.2/howto/deployment/wsgi/

|

||||||

|

"""

|

||||||

|

|

||||||

|

import os

|

||||||

|

|

||||||

|

from django.core.wsgi import get_wsgi_application

|

||||||

|

|

||||||

|

os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'academic_graph.settings')

|

||||||

|

|

||||||

|

application = get_wsgi_application()

|

||||||

|

|

@ -0,0 +1,10 @@

|

||||||

|

from pathlib import Path

|

||||||

|

|

||||||

|

# 项目根目录

|

||||||

|

BASE_DIR = Path(__file__).resolve().parent.parent

|

||||||

|

|

||||||

|

# 数据集目录

|

||||||

|

DATA_DIR = BASE_DIR / 'data'

|

||||||

|

|

||||||

|

# 模型保存目录

|

||||||

|

MODEL_DIR = BASE_DIR / 'model'

|

||||||

|

|

@ -0,0 +1,113 @@

|

||||||

|

import dgl.function as fn

|

||||||

|

import torch

|

||||||

|

import torch.nn as nn

|

||||||

|

|

||||||

|

|

||||||

|

class LabelPropagation(nn.Module):

|

||||||

|

|

||||||

|

def __init__(self, num_layers, alpha, norm):

|

||||||

|

"""标签传播模型

|

||||||

|

|

||||||

|

.. math::

|

||||||

|

Y^{(t+1)} = \\alpha SY^{(t)} + (1-\\alpha)Y, Y^{(0)} = Y

|

||||||

|

|

||||||

|

:param num_layers: int 传播层数

|

||||||

|

:param alpha: float α参数

|

||||||

|

:param norm: str 邻接矩阵归一化方式

|

||||||

|

'left': S=D^{-1}A, 'right': S=AD^{-1}, 'both': S=D^{-1/2}AD^{-1/2}

|

||||||

|

"""

|

||||||

|

super().__init__()

|

||||||

|

self.num_layers = num_layers

|

||||||

|

self.alpha = alpha

|

||||||

|

self.norm = norm

|

||||||

|

|

||||||

|

@torch.no_grad()

|

||||||

|

def forward(self, g, labels, mask=None, post_step=None):

|

||||||

|

"""

|

||||||

|

:param g: DGLGraph 无向图

|

||||||

|

:param labels: tensor(N, C) one-hot标签

|

||||||

|

:param mask: tensor(N), optional 有标签顶点mask

|

||||||

|

:param post_step: callable, optional f: tensor(N, C) -> tensor(N, C)

|

||||||

|

:return: tensor(N, C) 预测标签概率

|

||||||

|

"""

|

||||||

|

with g.local_scope():

|

||||||

|

if mask is not None:

|

||||||

|

y = torch.zeros_like(labels)

|

||||||

|

y[mask] = labels[mask]

|

||||||

|

else:

|

||||||

|

y = labels

|

||||||

|

|

||||||

|

residual = (1 - self.alpha) * y

|

||||||

|

degs = g.in_degrees().float().clamp(min=1)

|

||||||

|

norm = torch.pow(degs, -0.5 if self.norm == 'both' else -1).unsqueeze(1) # (N, 1)

|

||||||

|

for _ in range(self.num_layers):

|

||||||

|

if self.norm in ('both', 'right'):

|

||||||

|

y *= norm

|

||||||

|

g.ndata['h'] = y

|

||||||

|

g.update_all(fn.copy_u('h', 'm'), fn.sum('m', 'h'))

|

||||||

|

y = self.alpha * g.ndata.pop('h')

|

||||||

|

if self.norm in ('both', 'left'):

|

||||||

|

y *= norm

|

||||||

|

y += residual

|

||||||

|

if post_step is not None:

|

||||||

|

y = post_step(y)

|

||||||

|

return y

|

||||||

|

|

||||||

|

|

||||||

|

class CorrectAndSmooth(nn.Module):

|

||||||

|

|

||||||

|

def __init__(

|

||||||

|

self, num_correct_layers, correct_alpha, correct_norm,

|

||||||

|

num_smooth_layers, smooth_alpha, smooth_norm, scale=1.0):

|

||||||

|

"""C&S模型"""

|

||||||

|

super().__init__()

|

||||||

|

self.correct_prop = LabelPropagation(num_correct_layers, correct_alpha, correct_norm)

|

||||||

|

self.smooth_prop = LabelPropagation(num_smooth_layers, smooth_alpha, smooth_norm)

|

||||||

|

self.scale = scale

|

||||||

|

|

||||||

|

def correct(self, g, labels, base_pred, mask):

|

||||||

|

"""Correct步,修正基础预测中的误差

|

||||||

|

|

||||||

|

:param g: DGLGraph 无向图

|

||||||

|

:param labels: tensor(N, C) one-hot标签

|

||||||

|

:param base_pred: tensor(N, C) 基础预测

|

||||||

|

:param mask: tensor(N) 训练集mask

|

||||||

|

:return: tensor(N, C) 修正后的预测

|

||||||

|

"""

|

||||||

|

err = torch.zeros_like(base_pred) # (N, C)

|

||||||

|

err[mask] = labels[mask] - base_pred[mask]

|

||||||

|

|

||||||

|

# FDiff-scale: 对训练集固定误差

|

||||||

|

def fix_input(y):

|

||||||

|

y[mask] = err[mask]

|

||||||

|

return y

|

||||||

|

|

||||||

|

smoothed_err = self.correct_prop(g, err, post_step=fix_input) # \hat{E}

|

||||||

|

corrected_pred = base_pred + self.scale * smoothed_err # Z^{(r)}

|

||||||

|

corrected_pred[corrected_pred.isnan()] = base_pred[corrected_pred.isnan()]

|

||||||

|

return corrected_pred

|

||||||

|

|

||||||

|

def smooth(self, g, labels, corrected_pred, mask):

|

||||||

|

"""Smooth步,平滑最终预测

|

||||||

|

|

||||||

|

:param g: DGLGraph 无向图

|

||||||

|

:param labels: tensor(N, C) one-hot标签

|

||||||

|

:param corrected_pred: tensor(N, C) 修正后的预测

|

||||||

|

:param mask: tensor(N) 训练集mask

|

||||||

|

:return: tensor(N, C) 最终预测

|

||||||

|

"""

|

||||||

|

guess = corrected_pred

|

||||||

|

guess[mask] = labels[mask]

|

||||||

|

return self.smooth_prop(g, guess)

|

||||||

|

|

||||||

|

def forward(self, g, labels, base_pred, mask):

|

||||||

|

"""

|

||||||

|

:param g: DGLGraph 无向图

|

||||||

|

:param labels: tensor(N, C) one-hot标签

|

||||||

|

:param base_pred: tensor(N, C) 基础预测

|

||||||

|

:param mask: tensor(N) 训练集mask

|

||||||

|

:return: tensor(N, C) 最终预测

|

||||||

|

"""

|

||||||

|

# corrected_pred = self.correct(g, labels, base_pred, mask)

|

||||||

|

corrected_pred = base_pred

|

||||||

|

return self.smooth(g, labels, corrected_pred, mask)

|

||||||

|

|

@ -0,0 +1,101 @@

|

||||||

|

import argparse

|

||||||

|

|

||||||

|

import dgl

|

||||||

|

import torch

|

||||||

|

import torch.nn as nn

|

||||||

|

import torch.nn.functional as F

|

||||||

|

import torch.optim as optim

|

||||||

|

|

||||||

|

from gnnrec.hge.cs.model import CorrectAndSmooth

|

||||||

|

from gnnrec.hge.utils import set_random_seed, get_device, load_data, calc_metrics, METRICS_STR

|

||||||

|

|

||||||

|

|

||||||

|

def train_base_model(base_model, feats, labels, train_idx, val_idx, test_idx, evaluator, args):

|

||||||

|

print('Training base model...')

|

||||||

|

optimizer = optim.Adam(base_model.parameters(), lr=args.lr)

|

||||||

|

for epoch in range(args.epochs):

|

||||||

|

base_model.train()

|

||||||

|

logits = base_model(feats)

|

||||||

|

loss = F.cross_entropy(logits[train_idx], labels[train_idx])

|

||||||

|

optimizer.zero_grad()

|

||||||

|

loss.backward()

|

||||||

|

optimizer.step()

|

||||||

|

print(('Epoch {:d} | Loss {:.4f} | ' + METRICS_STR).format(

|

||||||

|

epoch, loss.item(),

|

||||||

|

*evaluate(base_model, feats, labels, train_idx, val_idx, test_idx, evaluator)

|

||||||

|

))

|

||||||

|

|

||||||

|

|

||||||

|

@torch.no_grad()

|

||||||

|

def evaluate(model, feats, labels, train_idx, val_idx, test_idx, evaluator):

|

||||||

|

model.eval()

|

||||||

|

logits = model(feats)

|

||||||

|

return calc_metrics(logits, labels, train_idx, val_idx, test_idx, evaluator)

|

||||||

|

|

||||||

|

|

||||||

|

def correct_and_smooth(base_model, g, feats, labels, train_idx, val_idx, test_idx, evaluator, args):

|

||||||

|

print('Training C&S...')

|

||||||

|

base_model.eval()

|

||||||

|

base_pred = base_model(feats).softmax(dim=1) # 注意要softmax

|

||||||

|

|

||||||

|

cs = CorrectAndSmooth(

|

||||||

|

args.num_correct_layers, args.correct_alpha, args.correct_norm,

|

||||||

|

args.num_smooth_layers, args.smooth_alpha, args.smooth_norm, args.scale

|

||||||

|

)

|

||||||

|

mask = torch.cat([train_idx, val_idx])

|

||||||

|

logits = cs(g, F.one_hot(labels).float(), base_pred, mask)

|

||||||

|

_, _, test_acc, _, _, test_f1 = calc_metrics(logits, labels, train_idx, val_idx, test_idx, evaluator)

|

||||||

|

print('Test Acc {:.4f} | Test Macro-F1 {:.4f}'.format(test_acc, test_f1))

|

||||||

|

|

||||||

|

|

||||||

|

def train(args):

|

||||||

|

set_random_seed(args.seed)

|

||||||

|

device = get_device(args.device)

|

||||||

|

data, _, feat, labels, _, train_idx, val_idx, test_idx, evaluator = \

|

||||||

|

load_data(args.dataset, device)

|

||||||

|

feat = (feat - feat.mean(dim=0)) / feat.std(dim=0)

|

||||||

|

# 标签传播图

|

||||||

|

if args.dataset in ('acm', 'dblp'):

|

||||||

|

pos_v, pos_u = data.pos

|

||||||

|

pg = dgl.graph((pos_u, pos_v), device=device)

|

||||||

|

else:

|

||||||

|

pg = dgl.load_graphs(args.prop_graph)[0][-1].to(device)

|

||||||

|

|

||||||

|

if args.dataset == 'oag-venue':

|

||||||

|

labels[labels == -1] = 0

|

||||||

|

|

||||||

|

base_model = nn.Linear(feat.shape[1], data.num_classes).to(device)

|

||||||

|

train_base_model(base_model, feat, labels, train_idx, val_idx, test_idx, evaluator, args)

|

||||||

|

correct_and_smooth(base_model, pg, feat, labels, train_idx, val_idx, test_idx, evaluator, args)

|

||||||

|

|

||||||

|

|

||||||

|

def main():

|

||||||

|

parser = argparse.ArgumentParser(description='训练C&S模型')

|

||||||

|

parser.add_argument('--seed', type=int, default=0, help='随机数种子')

|

||||||

|

parser.add_argument('--device', type=int, default=0, help='GPU设备')

|

||||||

|

parser.add_argument('--dataset', choices=['acm', 'dblp', 'ogbn-mag', 'oag-venue'], default='ogbn-mag', help='数据集')

|

||||||

|

# 基础模型

|

||||||

|

parser.add_argument('--epochs', type=int, default=300, help='基础模型训练epoch数')

|

||||||

|

parser.add_argument('--lr', type=float, default=0.01, help='基础模型学习率')

|

||||||

|

# C&S

|

||||||

|

parser.add_argument('--prop-graph', help='标签传播图所在路径')

|

||||||

|

parser.add_argument('--num-correct-layers', type=int, default=50, help='Correct步骤传播层数')

|

||||||

|

parser.add_argument('--correct-alpha', type=float, default=0.5, help='Correct步骤α值')

|

||||||

|

parser.add_argument(

|

||||||

|

'--correct-norm', choices=['left', 'right', 'both'], default='both',

|

||||||

|

help='Correct步骤归一化方式'

|

||||||

|

)

|

||||||

|

parser.add_argument('--num-smooth-layers', type=int, default=50, help='Smooth步骤传播层数')

|

||||||

|

parser.add_argument('--smooth-alpha', type=float, default=0.5, help='Smooth步骤α值')

|

||||||

|

parser.add_argument(

|

||||||

|

'--smooth-norm', choices=['left', 'right', 'both'], default='both',

|

||||||

|

help='Smooth步骤归一化方式'

|

||||||

|

)

|

||||||

|

parser.add_argument('--scale', type=float, default=20, help='放缩系数')

|

||||||

|

args = parser.parse_args()

|

||||||

|

print(args)

|

||||||

|

train(args)

|

||||||

|

|

||||||

|

|

||||||

|

if __name__ == '__main__':

|

||||||

|

main()

|

||||||

|

|

@ -0,0 +1 @@

|

||||||

|

from .heco import ACMDataset, DBLPDataset

|

||||||

|

|

@ -0,0 +1,204 @@

|

||||||

|

import os

|

||||||

|

import shutil

|

||||||

|

import zipfile

|

||||||

|

|

||||||

|

import dgl

|

||||||

|

import numpy as np

|

||||||

|

import pandas as pd

|

||||||

|

import scipy.sparse as sp

|

||||||

|

import torch

|

||||||

|

from dgl.data import DGLDataset

|

||||||

|

from dgl.data.utils import download, save_graphs, save_info, load_graphs, load_info, \

|

||||||

|

generate_mask_tensor, idx2mask

|

||||||

|

|

||||||

|

|

||||||

|

class HeCoDataset(DGLDataset):

|

||||||

|

"""HeCo模型使用的数据集基类

|

||||||

|

|

||||||

|

论文链接:https://arxiv.org/pdf/2105.09111

|

||||||

|

|

||||||

|

类属性

|

||||||

|

-----

|

||||||

|

* num_classes: 类别数

|

||||||

|

* metapaths: 使用的元路径

|

||||||

|

* predict_ntype: 目标顶点类型

|

||||||

|

* pos: (tensor(E_pos), tensor(E_pos)) 目标顶点正样本对,pos[1][i]是pos[0][i]的正样本

|

||||||

|

"""

|

||||||

|

|

||||||

|

def __init__(self, name, ntypes):

|

||||||

|

url = 'https://api.github.com/repos/liun-online/HeCo/zipball/main'

|

||||||

|

self._ntypes = {ntype[0]: ntype for ntype in ntypes}

|

||||||

|

super().__init__(name + '-heco', url)

|

||||||

|

|

||||||

|

def download(self):

|

||||||

|

file_path = os.path.join(self.raw_dir, 'HeCo-main.zip')

|

||||||

|

if not os.path.exists(file_path):

|

||||||

|

download(self.url, path=file_path)

|

||||||

|

with zipfile.ZipFile(file_path, 'r') as f:

|

||||||

|

f.extractall(self.raw_dir)

|

||||||

|

shutil.copytree(

|

||||||

|

os.path.join(self.raw_dir, 'HeCo-main', 'data', self.name.split('-')[0]),

|

||||||

|

os.path.join(self.raw_path)

|

||||||

|

)

|

||||||

|

|

||||||

|

def save(self):

|

||||||

|

save_graphs(os.path.join(self.save_path, self.name + '_dgl_graph.bin'), [self.g])

|

||||||

|

save_info(os.path.join(self.raw_path, self.name + '_pos.pkl'), {'pos_i': self.pos_i, 'pos_j': self.pos_j})

|

||||||

|

|

||||||

|

def load(self):

|

||||||

|

graphs, _ = load_graphs(os.path.join(self.save_path, self.name + '_dgl_graph.bin'))

|

||||||

|

self.g = graphs[0]

|

||||||

|

ntype = self.predict_ntype

|

||||||

|

self._num_classes = self.g.nodes[ntype].data['label'].max().item() + 1

|

||||||

|

for k in ('train_mask', 'val_mask', 'test_mask'):

|

||||||

|

self.g.nodes[ntype].data[k] = self.g.nodes[ntype].data[k].bool()

|

||||||

|

info = load_info(os.path.join(self.raw_path, self.name + '_pos.pkl'))

|

||||||

|

self.pos_i, self.pos_j = info['pos_i'], info['pos_j']

|

||||||

|

|

||||||

|

def process(self):

|

||||||

|

self.g = dgl.heterograph(self._read_edges())

|

||||||

|

|

||||||

|

feats = self._read_feats()

|

||||||

|

for ntype, feat in feats.items():

|

||||||

|

self.g.nodes[ntype].data['feat'] = feat

|

||||||

|

|

||||||

|

labels = torch.from_numpy(np.load(os.path.join(self.raw_path, 'labels.npy'))).long()

|

||||||

|

self._num_classes = labels.max().item() + 1

|

||||||

|

self.g.nodes[self.predict_ntype].data['label'] = labels

|

||||||

|

|

||||||

|

n = self.g.num_nodes(self.predict_ntype)

|

||||||

|

for split in ('train', 'val', 'test'):

|

||||||

|

idx = np.load(os.path.join(self.raw_path, f'{split}_60.npy'))

|

||||||

|

mask = generate_mask_tensor(idx2mask(idx, n))

|

||||||

|

self.g.nodes[self.predict_ntype].data[f'{split}_mask'] = mask

|

||||||

|

|

||||||

|

pos_i, pos_j = sp.load_npz(os.path.join(self.raw_path, 'pos.npz')).nonzero()

|

||||||

|

self.pos_i, self.pos_j = torch.from_numpy(pos_i).long(), torch.from_numpy(pos_j).long()

|

||||||

|

|

||||||

|

def _read_edges(self):

|

||||||

|

edges = {}

|

||||||

|

for file in os.listdir(self.raw_path):

|

||||||

|

name, ext = os.path.splitext(file)

|

||||||

|

if ext == '.txt':

|

||||||

|

u, v = name

|

||||||

|

e = pd.read_csv(os.path.join(self.raw_path, f'{u}{v}.txt'), sep='\t', names=[u, v])

|

||||||

|

src = e[u].to_list()

|

||||||

|

dst = e[v].to_list()

|

||||||

|

edges[(self._ntypes[u], f'{u}{v}', self._ntypes[v])] = (src, dst)

|

||||||

|

edges[(self._ntypes[v], f'{v}{u}', self._ntypes[u])] = (dst, src)

|

||||||

|

return edges

|

||||||

|

|

||||||

|

def _read_feats(self):

|

||||||

|

feats = {}

|

||||||

|

for u in self._ntypes:

|

||||||

|

file = os.path.join(self.raw_path, f'{u}_feat.npz')

|

||||||

|

if os.path.exists(file):

|

||||||

|

feats[self._ntypes[u]] = torch.from_numpy(sp.load_npz(file).toarray()).float()

|

||||||

|

return feats

|

||||||

|

|

||||||

|

def has_cache(self):

|

||||||

|

return os.path.exists(os.path.join(self.save_path, self.name + '_dgl_graph.bin'))

|

||||||

|

|

||||||

|

def __getitem__(self, idx):

|

||||||

|

if idx != 0:

|

||||||

|

raise IndexError('This dataset has only one graph')

|

||||||

|

return self.g

|

||||||

|

|

||||||

|

def __len__(self):

|

||||||

|

return 1

|

||||||

|

|

||||||

|

@property

|

||||||

|

def num_classes(self):

|

||||||

|

return self._num_classes

|

||||||

|

|

||||||

|

@property

|

||||||

|

def metapaths(self):

|

||||||

|

raise NotImplementedError

|

||||||

|

|

||||||

|

@property

|

||||||

|

def predict_ntype(self):

|

||||||

|

raise NotImplementedError

|

||||||

|

|

||||||

|

@property

|

||||||

|

def pos(self):

|

||||||

|

return self.pos_i, self.pos_j

|

||||||

|

|

||||||

|

|

||||||

|

class ACMDataset(HeCoDataset):

|

||||||

|

"""ACM数据集

|

||||||

|

|

||||||

|

统计数据

|

||||||

|

-----

|

||||||

|

* 顶点:4019 paper, 7167 author, 60 subject

|

||||||

|

* 边:13407 paper-author, 4019 paper-subject

|

||||||

|

* 目标顶点类型:paper

|

||||||

|

* 类别数:3

|

||||||

|

* 顶点划分:180 train, 1000 valid, 1000 test

|

||||||

|

|

||||||

|

paper顶点特征

|

||||||

|

-----

|

||||||

|

* feat: tensor(N_paper, 1902)

|

||||||

|

* label: tensor(N_paper) 0~2

|

||||||

|

* train_mask, val_mask, test_mask: tensor(N_paper)

|

||||||

|

|

||||||

|

author顶点特征

|

||||||

|

-----

|

||||||

|

* feat: tensor(7167, 1902)

|

||||||

|

"""

|

||||||

|

|

||||||

|

def __init__(self):

|

||||||

|

super().__init__('acm', ['paper', 'author', 'subject'])

|

||||||

|

|

||||||

|

@property

|

||||||

|

def metapaths(self):

|

||||||

|

return [['pa', 'ap'], ['ps', 'sp']]

|

||||||

|

|

||||||

|

@property

|

||||||

|

def predict_ntype(self):

|

||||||

|

return 'paper'

|

||||||

|

|

||||||

|

|

||||||

|

class DBLPDataset(HeCoDataset):

|

||||||

|

"""DBLP数据集

|

||||||

|

|

||||||

|

统计数据

|

||||||

|

-----

|

||||||

|

* 顶点:4057 author, 14328 paper, 20 conference, 7723 term

|

||||||

|

* 边:19645 paper-author, 14328 paper-conference, 85810 paper-term

|

||||||

|

* 目标顶点类型:author

|

||||||

|

* 类别数:4

|

||||||

|

* 顶点划分:240 train, 1000 valid, 1000 test

|

||||||

|

|

||||||

|

author顶点特征

|

||||||

|

-----

|

||||||

|

* feat: tensor(N_author, 334)

|

||||||

|

* label: tensor(N_author) 0~3

|

||||||

|

* train_mask, val_mask, test_mask: tensor(N_author)

|

||||||

|

|

||||||

|

paper顶点特征

|

||||||

|

-----

|

||||||

|

* feat: tensor(14328, 4231)

|

||||||

|

|

||||||

|

term顶点特征

|

||||||

|

-----

|

||||||

|

* feat: tensor(7723, 50)

|

||||||

|

"""

|

||||||

|

|

||||||

|

def __init__(self):

|

||||||

|

super().__init__('dblp', ['author', 'paper', 'conference', 'term'])

|

||||||

|

|

||||||

|

def _read_feats(self):

|

||||||

|

feats = {}

|

||||||

|

for u in 'ap':

|

||||||

|

file = os.path.join(self.raw_path, f'{u}_feat.npz')

|

||||||

|

feats[self._ntypes[u]] = torch.from_numpy(sp.load_npz(file).toarray()).float()

|

||||||

|

feats['term'] = torch.from_numpy(np.load(os.path.join(self.raw_path, 't_feat.npz'))).float()

|

||||||

|

return feats

|

||||||

|

|

||||||

|

@property

|

||||||

|

def metapaths(self):

|

||||||

|

return [['ap', 'pa'], ['ap', 'pc', 'cp', 'pa'], ['ap', 'pt', 'tp', 'pa']]

|

||||||

|

|

||||||

|

@property

|

||||||

|

def predict_ntype(self):

|

||||||

|

return 'author'

|

||||||

|

|

@ -0,0 +1,269 @@

|

||||||

|

import dgl.function as fn

|

||||||

|

import torch

|

||||||

|

import torch.nn as nn

|

||||||

|

import torch.nn.functional as F

|

||||||

|

from dgl.nn import GraphConv

|

||||||

|

from dgl.ops import edge_softmax

|

||||||

|

|

||||||

|

|

||||||

|

class HeCoGATConv(nn.Module):

|

||||||

|

|

||||||

|

def __init__(self, hidden_dim, attn_drop=0.0, negative_slope=0.01, activation=None):

|

||||||

|

"""HeCo作者代码中使用的GAT

|

||||||

|

|

||||||

|

:param hidden_dim: int 隐含特征维数

|

||||||

|

:param attn_drop: float 注意力dropout

|

||||||

|

:param negative_slope: float, optional LeakyReLU负斜率,默认为0.01

|

||||||

|

:param activation: callable, optional 激活函数,默认为None

|

||||||

|

"""

|

||||||

|

super().__init__()

|

||||||

|

self.attn_l = nn.Parameter(torch.FloatTensor(1, hidden_dim))

|

||||||

|

self.attn_r = nn.Parameter(torch.FloatTensor(1, hidden_dim))

|

||||||

|

self.attn_drop = nn.Dropout(attn_drop)

|

||||||

|

self.leaky_relu = nn.LeakyReLU(negative_slope)

|

||||||

|

self.activation = activation

|

||||||

|

self.reset_parameters()

|

||||||

|

|

||||||

|

def reset_parameters(self):

|

||||||

|

gain = nn.init.calculate_gain('relu')

|

||||||

|

nn.init.xavier_normal_(self.attn_l, gain)

|

||||||

|

nn.init.xavier_normal_(self.attn_r, gain)

|

||||||

|

|

||||||

|

def forward(self, g, feat_src, feat_dst):

|

||||||

|

"""

|

||||||

|

:param g: DGLGraph 邻居-目标顶点二分图

|

||||||

|

:param feat_src: tensor(N_src, d) 邻居顶点输入特征

|

||||||

|

:param feat_dst: tensor(N_dst, d) 目标顶点输入特征

|

||||||

|

:return: tensor(N_dst, d) 目标顶点输出特征

|

||||||

|

"""

|

||||||

|

with g.local_scope():

|

||||||

|

# HeCo作者代码中使用attn_drop的方式与原始GAT不同,这样是不对的,却能顶点聚类提升性能……

|

||||||

|

attn_l = self.attn_drop(self.attn_l)

|

||||||

|

attn_r = self.attn_drop(self.attn_r)

|

||||||

|

el = (feat_src * attn_l).sum(dim=-1).unsqueeze(dim=-1) # (N_src, 1)

|

||||||

|

er = (feat_dst * attn_r).sum(dim=-1).unsqueeze(dim=-1) # (N_dst, 1)

|

||||||

|

g.srcdata.update({'ft': feat_src, 'el': el})

|

||||||

|

g.dstdata['er'] = er

|

||||||

|

g.apply_edges(fn.u_add_v('el', 'er', 'e'))

|

||||||

|

e = self.leaky_relu(g.edata.pop('e'))

|

||||||

|

g.edata['a'] = edge_softmax(g, e) # (E, 1)

|

||||||

|

|

||||||

|

# 消息传递

|

||||||

|

g.update_all(fn.u_mul_e('ft', 'a', 'm'), fn.sum('m', 'ft'))

|

||||||

|

ret = g.dstdata['ft']

|

||||||

|

if self.activation:

|

||||||

|

ret = self.activation(ret)

|

||||||

|

return ret

|

||||||

|

|

||||||

|

|

||||||

|

class Attention(nn.Module):

|

||||||

|

|

||||||

|

def __init__(self, hidden_dim, attn_drop):

|

||||||

|

"""语义层次的注意力

|

||||||

|

|

||||||

|

:param hidden_dim: int 隐含特征维数

|

||||||

|

:param attn_drop: float 注意力dropout

|

||||||

|

"""

|

||||||

|

super().__init__()

|

||||||

|

self.fc = nn.Linear(hidden_dim, hidden_dim)

|

||||||

|

self.attn = nn.Parameter(torch.FloatTensor(1, hidden_dim))

|

||||||

|

self.attn_drop = nn.Dropout(attn_drop)

|

||||||

|

self.reset_parameters()

|

||||||

|

|

||||||

|

def reset_parameters(self):

|

||||||

|

gain = nn.init.calculate_gain('relu')

|

||||||

|

nn.init.xavier_normal_(self.fc.weight, gain)

|

||||||

|

nn.init.xavier_normal_(self.attn, gain)

|

||||||

|

|

||||||

|

def forward(self, h):

|

||||||

|

"""

|

||||||

|

:param h: tensor(N, M, d) 顶点基于不同元路径/类型的嵌入,N为顶点数,M为元路径/类型数

|

||||||

|

:return: tensor(N, d) 顶点的最终嵌入

|

||||||

|

"""

|

||||||

|

attn = self.attn_drop(self.attn)

|

||||||

|

# (N, M, d) -> (M, d) -> (M, 1)

|

||||||

|

w = torch.tanh(self.fc(h)).mean(dim=0).matmul(attn.t())

|

||||||

|

beta = torch.softmax(w, dim=0) # (M, 1)

|

||||||

|

beta = beta.expand((h.shape[0],) + beta.shape) # (N, M, 1)

|

||||||

|

z = (beta * h).sum(dim=1) # (N, d)

|

||||||

|

return z

|

||||||

|

|

||||||

|

|

||||||

|

class NetworkSchemaEncoder(nn.Module):

|

||||||

|

|

||||||

|

def __init__(self, hidden_dim, attn_drop, relations):

|

||||||

|

"""网络结构视图编码器

|

||||||

|

|

||||||

|

:param hidden_dim: int 隐含特征维数

|

||||||

|

:param attn_drop: float 注意力dropout

|

||||||

|

:param relations: List[(str, str, str)] 目标顶点关联的关系列表,长度为邻居类型数S

|

||||||

|

"""

|

||||||

|

super().__init__()

|

||||||

|

self.relations = relations

|

||||||

|

self.dtype = relations[0][2]

|

||||||

|

self.gats = nn.ModuleDict({

|

||||||

|

r[0]: HeCoGATConv(hidden_dim, attn_drop, activation=F.elu)

|

||||||

|

for r in relations

|

||||||

|

})

|

||||||

|

self.attn = Attention(hidden_dim, attn_drop)

|

||||||

|

|

||||||

|

def forward(self, g, feats):

|

||||||

|

"""

|

||||||

|

:param g: DGLGraph 异构图

|

||||||

|

:param feats: Dict[str, tensor(N_i, d)] 顶点类型到输入特征的映射

|

||||||

|

:return: tensor(N_dst, d) 目标顶点的最终嵌入

|

||||||

|

"""

|

||||||

|

feat_dst = feats[self.dtype][:g.num_dst_nodes(self.dtype)]

|

||||||

|

h = []

|

||||||

|

for stype, etype, dtype in self.relations:

|

||||||

|

h.append(self.gats[stype](g[stype, etype, dtype], feats[stype], feat_dst))

|

||||||

|

h = torch.stack(h, dim=1) # (N_dst, S, d)

|

||||||

|

z_sc = self.attn(h) # (N_dst, d)

|

||||||

|

return z_sc

|

||||||

|

|

||||||

|

|

||||||

|

class PositiveGraphEncoder(nn.Module):

|

||||||

|

|

||||||

|

def __init__(self, num_metapaths, in_dim, hidden_dim, attn_drop):

|

||||||

|

"""正样本视图编码器

|

||||||

|

|

||||||

|

:param num_metapaths: int 元路径数量M

|

||||||

|

:param hidden_dim: int 隐含特征维数

|

||||||

|

:param attn_drop: float 注意力dropout

|

||||||

|

"""

|

||||||

|

super().__init__()

|

||||||

|

self.gcns = nn.ModuleList([

|

||||||

|