Initial commit

parent

81a2b1cbd9

commit

fe51ac42df

48

README.md

|

|

@ -1,40 +1,34 @@

|

|||

# scan context

|

||||

# Relocalization Research Based on Scan Context and CNN

|

||||

|

||||

# Scan Context: Egocentric Spatial Descriptor for Place Recognition within 3D Point Cloud Map

|

||||

# 1-Day Learning, 1-Year Localization: Long-Term LiDAR Localization Using Scan Context Image

|

||||

|

||||

**2018 IROS Giseop Kim and Ayoung Kim**

|

||||

2019 ICRA

|

||||

|

||||

## Background

|

||||

## SCI localization framework

|

||||

|

||||

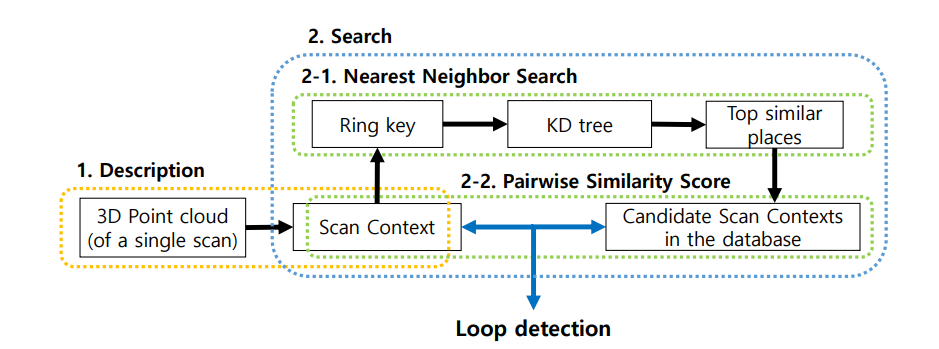

- Loop closure detection (place recognition) = place description + search

|

||||

- 3D point clouds lack color and texture information, so features specific to conventional images (ORB, SIFT, etc.) cannot be extracted

|

||||

- Without preprocessing the point cloud data, only geometric matching is possible, which is computationally expensive

|

||||

|

||||

|

||||

## challenge

|

||||

## SCI

|

||||

|

||||

- A dimensionality-reduced form that preserves as much depth information as possible

|

||||

- Descriptor encoding

|

||||

- Similarity scoring

|

||||

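Experiments verified a slight improvement over training with single-channel images. The proposed SCI is more discriminative than SC and is a format better suited as CNN input. The process is shown in the figure. We note that further study of network tuning for the choice between monochrome images and color maps may improve localization performance.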

|

||||

|

||||

## Framework

|

||||

|

||||

|

||||

|

||||

In Matlab, the Jet colormap turns a grayscale image into a colored heat map: the higher the gray value, the warmer the color, and vice versa.
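To make the grayscale-to-heat-map step concrete, here is a minimal C++ sketch of a jet-style mapping. It assumes the Scan Context values have already been normalized to [0, 1]. The function name `jetLikeColor` and the piecewise-linear ramps are illustrative assumptions that only approximate the shape of a jet colormap; they are not MATLAB's actual lookup table.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

// Piecewise-linear approximation of a jet-style colormap (hypothetical helper,
// not MATLAB's exact table). Input v in [0, 1]; output {r, g, b} in [0, 1].
// Low values come out blue (cold), high values come out red (warm).
std::array<double, 3> jetLikeColor(double v)
{
    assert(v >= 0.0 && v <= 1.0);
    // Triangular ramp with a plateau, clipped to [0, 1].
    auto ramp = [](double x) {
        return std::max(0.0, std::min(1.0, 1.5 - std::fabs(x)));
    };
    return {ramp(4.0 * v - 3.0), ramp(4.0 * v - 2.0), ramp(4.0 * v - 1.0)};
}
```

Applying such a mapping to every bin would turn a one-channel Scan Context into a three-channel image, which is the kind of input the note above describes.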

|

||||

|

||||

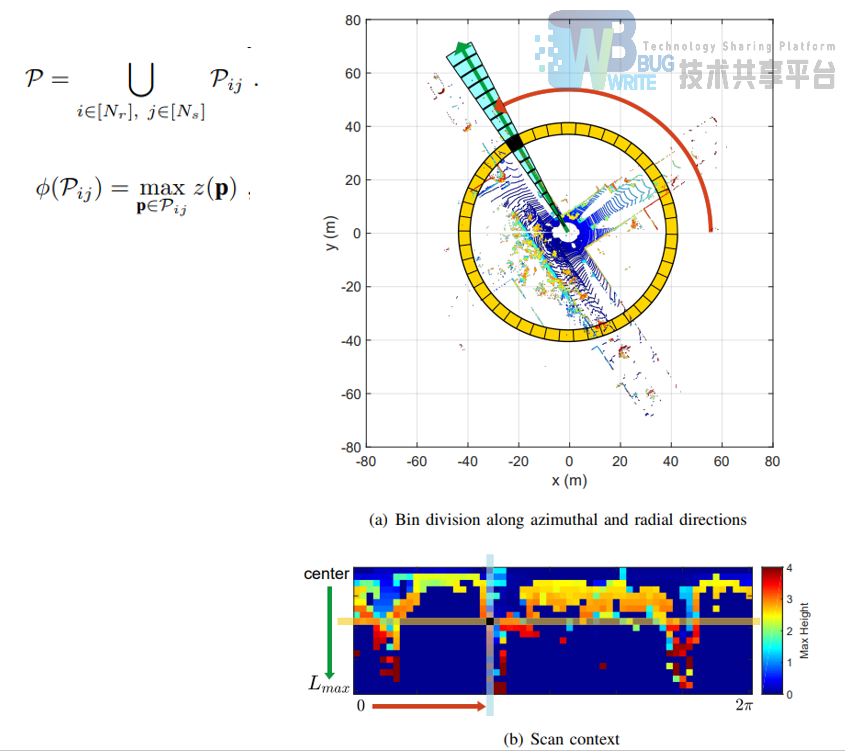

## scan-context

|

||||

|

||||

|

||||

## CNN selection

|

||||

CNN network

|

||||

|

||||

Input:

|

||||

1. N-way SCI augmentation

|

||||

2. One-hot encoded vector indicating the class

|

||||

Output:

|

||||

score vector

|

||||

|

||||

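The point cloud is divided into ring-shaped bins, and the value of each bin is the maximum height of the points inside it. This is how the dimensionality reduction is achieved.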
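The binning described above can be sketched in a few lines of standard C++. This is a simplified illustration, not the repository's implementation: the `Point3` type, the function name `makeScanContextSketch`, and the floor-based indexing are assumptions made for this example, and heights are assumed to be non-negative so that an empty bin can be stored as 0.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct Point3 { double x, y, z; };  // hypothetical minimal point type

using Descriptor = std::vector<std::vector<double>>;  // indexed as [ring][sector]

// Split the ground plane into numRing x numSector polar bins and keep the
// maximum height seen in each bin. Empty bins stay at 0 (heights assumed >= 0).
Descriptor makeScanContextSketch(const std::vector<Point3>& cloud,
                                 int numRing, int numSector, double maxRadius)
{
    assert(numRing > 0 && numSector > 0 && maxRadius > 0.0);
    const double kPi = std::acos(-1.0);
    Descriptor desc(numRing, std::vector<double>(numSector, 0.0));

    for (const Point3& p : cloud) {
        const double range = std::hypot(p.x, p.y);
        if (range > maxRadius) continue;  // outside the region of interest

        double angleDeg = std::atan2(p.y, p.x) * 180.0 / kPi;  // (-180, 180]
        if (angleDeg < 0.0) angleDeg += 360.0;                  // wrap to [0, 360]

        // Floor to a bin index and clamp the upper boundary into the last bin.
        const int ring = std::min(numRing - 1,
                                  static_cast<int>(range / maxRadius * numRing));
        const int sector = std::min(numSector - 1,
                                    static_cast<int>(angleDeg / 360.0 * numSector));

        desc[ring][sector] = std::max(desc[ring][sector], p.z);
    }
    return desc;
}
```

With 20 rings, 60 sectors, and an 80 m radius, every scan becomes a 20 x 60 matrix regardless of how many points it contains.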

|

||||

|

||||

|

||||

|

||||

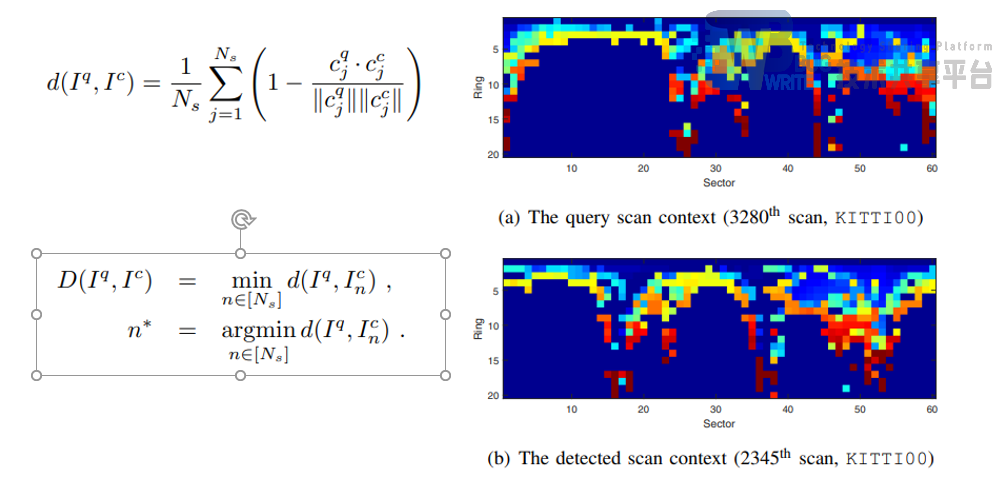

## Similarity Score between Scan Contexts

|

||||

## Unseen place

|

||||

|

||||

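The LiDAR viewpoint may differ between visits. When the LiDAR rotates in place by some angle at the same location, the column vectors keep their values but are shifted to other column positions; for the row vectors, the order of the elements changes, but the rows themselves do not move. Column-wise comparison is therefore used.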
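A short sketch may help show why a pure rotation can be undone by trying column shifts. The code below is an illustration under assumptions: the names `columnwiseDistance` and `bestShiftDistance` are made up for this example, and the search is a plain brute force over all shifts rather than any optimized variant.

```cpp
#include <cassert>
#include <cmath>
#include <limits>
#include <utility>
#include <vector>

using Descriptor = std::vector<std::vector<double>>;  // indexed as [ring][sector]

// Mean cosine distance over sector columns, reading `b` as if its columns
// were circularly shifted right by `shift`. Columns that are all-zero in
// either descriptor are skipped.
double columnwiseDistance(const Descriptor& a, const Descriptor& b, int shift)
{
    const int rows = static_cast<int>(a.size());
    const int cols = static_cast<int>(a[0].size());
    double sum = 0.0;
    int used = 0;
    for (int c = 0; c < cols; ++c) {
        const int cb = ((c - shift) % cols + cols) % cols;  // source column in b
        double dot = 0.0, na = 0.0, nb = 0.0;
        for (int r = 0; r < rows; ++r) {
            dot += a[r][c] * b[r][cb];
            na += a[r][c] * a[r][c];
            nb += b[r][cb] * b[r][cb];
        }
        if (na == 0.0 || nb == 0.0) continue;
        sum += dot / (std::sqrt(na) * std::sqrt(nb));
        ++used;
    }
    return used > 0 ? 1.0 - sum / used : 1.0;  // no comparable column: dissimilar
}

// Try every column shift and return {smallest distance, shift that achieves it}.
std::pair<double, int> bestShiftDistance(const Descriptor& a, const Descriptor& b)
{
    assert(!a.empty() && a.size() == b.size() && a[0].size() == b[0].size());
    double best = std::numeric_limits<double>::max();
    int bestShift = 0;
    const int cols = static_cast<int>(a[0].size());
    for (int s = 0; s < cols; ++s) {
        const double d = columnwiseDistance(a, b, s);
        if (d < best) { best = d; bestShift = s; }
    }
    return {best, bestShift};
}
```

If `b` is a rotated copy of `a`, the minimum distance is close to zero and the returned shift indicates how many sectors the sensor turned.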

|

||||

|

||||

|

||||

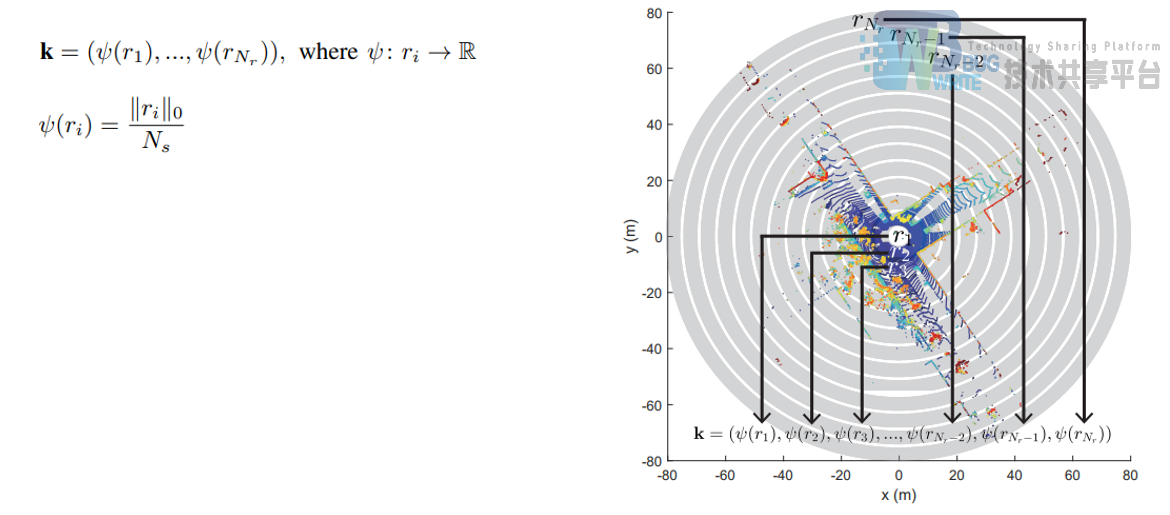

## Two-phase Search Algorithm

|

||||

|

||||

- Build a KD-tree from the ring keys, then run nearest-neighbor retrieval

|

||||

|

||||

- Similarity scoring

|

||||

|

||||

- Run ICP once the frame matching the loop closure is found
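The first phase of the search above can be sketched as follows. This is a hedged illustration: `makeRingKey` and `nearestKeys` are names invented for this example, and the candidate lookup is a brute-force stand-in for the KD-tree, chosen only to keep the sketch free of external libraries.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

using Descriptor = std::vector<std::vector<double>>;  // indexed as [ring][sector]

// Ring key: the mean of each ring (row). A pure rotation only permutes the
// sectors inside a ring, so the key does not change with yaw.
std::vector<double> makeRingKey(const Descriptor& desc)
{
    std::vector<double> key;
    key.reserve(desc.size());
    for (const auto& ring : desc) {
        assert(!ring.empty());
        key.push_back(std::accumulate(ring.begin(), ring.end(), 0.0) / ring.size());
    }
    return key;
}

double squaredDistance(const std::vector<double>& a, const std::vector<double>& b)
{
    assert(a.size() == b.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) sum += (a[i] - b[i]) * (a[i] - b[i]);
    return sum;
}

// Phase 1 stand-in: indices of the k stored keys closest to the query.
// A KD-tree would return the same candidates without scanning every key.
std::vector<std::size_t> nearestKeys(const std::vector<std::vector<double>>& keys,
                                     const std::vector<double>& query, std::size_t k)
{
    std::vector<std::size_t> order(keys.size());
    std::iota(order.begin(), order.end(), std::size_t{0});
    k = std::min(k, order.size());
    std::partial_sort(order.begin(), order.begin() + static_cast<std::ptrdiff_t>(k),
                      order.end(), [&](std::size_t lhs, std::size_t rhs) {
                          return squaredDistance(keys[lhs], query) <
                                 squaredDistance(keys[rhs], query);
                      });
    order.resize(k);
    return order;
}
```

Only the few candidates returned here would then go through the more expensive similarity scoring, and a geometric refinement such as ICP would run on the accepted match.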

|

||||

|

||||

|

|

@ -0,0 +1,92 @@

|

|||

<!-- md preview: Show the rendered HTML markdown to the right of the current editor using ctrl-shift-m.-->

|

||||

|

||||

# Scan Context

|

||||

|

||||

## NEWS (Nov, 2020): integrated with LIO-SAM

|

||||

- A Scan Context integration for LIO-SAM, named [SC-LIO-SAM (link)](https://github.com/gisbi-kim/SC-LIO-SAM), is also released.

|

||||

|

||||

## NEWS (Oct, 2020): Radar Scan Context

|

||||

- An evaluation code for radar place recognition (a.k.a. Radar Scan Context) is uploaded.

|

||||

- Please see the *fast_evaluator_radar* directory.

|

||||

|

||||

## NEWS (April, 2020): C++ implementation

|

||||

- C++ implementation released!

|

||||

- See the directory `cpp/module/Scancontext`

|

||||

- Features

|

||||

- Light-weight: a single header and cpp file named "Scancontext.h" and "Scancontext.cpp"

|

||||

- Our module has a KD-tree, for which we use <a href="https://github.com/jlblancoc/nanoflann"> nanoflann</a>. nanoflann is also a single-header library, and that file is in our directory.

|

||||

- Easy to use: a user only needs two API functions, `makeAndSaveScancontextAndKeys` and `detectLoopClosureID`.

|

||||

- Fast: in our tests, the loop detector runs at 10-15 Hz (for a 20 x 60 descriptor with 10 candidates)

|

||||

- Example: Real-time LiDAR SLAM

|

||||

- We integrated the C++ implementation into the recently popular LiDAR odometry code, <a href="https://github.com/RobustFieldAutonomyLab/LeGO-LOAM"> LeGO-LOAM </a>.

|

||||

- That is, LiDAR SLAM = LiDAR Odometry (LeGO-LOAM) + Loop detection (Scan Context) and closure (GTSAM)

|

||||

- For details, see `cpp/example/lidar_slam` or refer to this <a href="https://github.com/irapkaist/SC-LeGO-LOAM"> repository (SC-LeGO-LOAM)</a>.

|

||||

---

|

||||

|

||||

|

||||

- Scan Context is a global descriptor for LiDAR point clouds, proposed in the paper below; the details are summarized in this <a href="https://www.youtube.com/watch?v=_etNafgQXoY"> video </a>.

|

||||

|

||||

```

|

||||

@INPROCEEDINGS { gkim-2018-iros,

|

||||

author = {Kim, Giseop and Kim, Ayoung},

|

||||

title = { Scan Context: Egocentric Spatial Descriptor for Place Recognition within {3D} Point Cloud Map },

|

||||

booktitle = { Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems },

|

||||

year = { 2018 },

|

||||

month = { Oct. },

|

||||

address = { Madrid }

|

||||

}

|

||||

```

|

||||

- This point cloud descriptor is used for place retrieval problems such as place recognition and long-term localization.

|

||||

|

||||

|

||||

## What is Scan Context?

|

||||

|

||||

- Scan Context is a global descriptor for LiDAR point clouds, designed especially for the sparse and noisy point clouds acquired in outdoor environments.

|

||||

- It encodes egocentric visible information as below:

|

||||

<p align="center"><img src="example/basic/scmaking.gif" width=400></p>

|

||||

|

||||

- A user can vary the resolution of a Scan Context. Below are examples of Scan Contexts at various resolutions for the same point cloud.

|

||||

<p align="center"><img src="example/basic/various_res.png" width=300></p>

|

||||

|

||||

|

||||

## How to use?: example cases

|

||||

- The structure of this repository is composed of 3 example use cases.

|

||||

- Most of the code is written in Matlab.

|

||||

- The _matlab_ directory contains the main functions, including Scan Context generation and the distance function.

|

||||

- The _example_ directory contains full example code for a few applications. We provide a total of 3 examples.

|

||||

1. _**basics**_ contains basic code, such as descriptor generation, and is a good starting point for understanding Scan Context.

|

||||

|

||||

2. _**place recognition**_ is an example directory for our IROS18 paper. The example uses KITTI sequence 00, and PlaceRecognizer.m is the main code. You can grasp the full pipeline of Scan Context-based place recognition by reading and following PlaceRecognizer.m. Our Scan Context-based place recognition system consists of two steps: description and search. The search step is composed of two hierarchical stages (1. a ring key-based KD tree for fast candidate proposal, 2. a pairwise comparison between each candidate and the query for nearest-neighbor search). We note that our pairwise distance, which uses coarse yaw alignment, detects reverse revisits well, unlike other methods. The pipeline is shown below.

|

||||

<p align="center"><img src="example/place_recognition/sc_pipeline.png" width=600></p>

|

||||

|

||||

3. _**long-term localization**_ is an example directory for our RAL19 paper. To separate mapping from localization, there are separate train and test steps. The main training and test code is written in Python and Keras and provided as Jupyter notebooks; only the data generation and performance evaluation code is written in Matlab. Some paths may not work directly in your environment, but the evaluation code (e.g., makeDataForPRcurveForSCIresult.m) will help you understand how this classification-based SCI-localization system works. The figure below depicts our long-term localization pipeline. <p align="center"><img src="example/longterm_localization/sci_pipeline.png" width=600></p> More details of our long-term localization pipeline can be found in the paper below, and we also recommend watching this <a href="https://www.youtube.com/watch?v=apmmduXTnaE"> video </a>.

|

||||

```

|

||||

@ARTICLE{ gkim-2019-ral,

|

||||

author = {G. {Kim} and B. {Park} and A. {Kim}},

|

||||

journal = {IEEE Robotics and Automation Letters},

|

||||

title = {1-Day Learning, 1-Year Localization: Long-Term LiDAR Localization Using Scan Context Image},

|

||||

year = {2019},

|

||||

volume = {4},

|

||||

number = {2},

|

||||

pages = {1948-1955},

|

||||

month = {April}

|

||||

}

|

||||

```

|

||||

|

||||

4. The _**SLAM**_ directory contains a practical use case of Scan Context in a SLAM pipeline. The details are maintained in the related repository _[PyICP SLAM](https://github.com/kissb2/PyICP-SLAM)_, a full-Python LiDAR SLAM codebase that uses Scan Context as its loop detector.
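The coarse yaw alignment mentioned in the place recognition example can be illustrated with a small, self-contained sketch. It assumes each scan has been reduced to a sector key (one mean value per sector); the names `coarseAlignShift` and `shiftToYawDeg` are hypothetical and chosen only for this example.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Find the circular shift of `key2` that best matches `key1` (smallest
// squared L2 difference). Both inputs are sector keys of equal length.
int coarseAlignShift(const std::vector<double>& key1, const std::vector<double>& key2)
{
    assert(!key1.empty() && key1.size() == key2.size());
    const int n = static_cast<int>(key1.size());
    int bestShift = 0;
    double bestCost = std::numeric_limits<double>::max();
    for (int shift = 0; shift < n; ++shift) {
        double cost = 0.0;
        for (int c = 0; c < n; ++c) {
            // Read key2 as if it were circularly shifted right by `shift`.
            const double diff = key1[c] - key2[(c - shift + n) % n];
            cost += diff * diff;
        }
        if (cost < bestCost) { bestCost = cost; bestShift = shift; }
    }
    return bestShift;
}

// Convert a column shift into a yaw difference in degrees.
double shiftToYawDeg(int shift, int numSector)
{
    assert(numSector > 0);
    return shift * 360.0 / numSector;
}
```

A revisit in the opposite driving direction shows up as a shift of about half the number of sectors, that is, a yaw difference near 180 degrees, which is why an alignment step of this kind helps with reverse revisits.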

|

||||

|

||||

## Acknowledgment

|

||||

This work is supported by the Korea Agency for Infrastructure Technology Advancement (KAIA) grant funded by the Ministry of Land, Infrastructure and Transport of Korea (19CTAP-C142170-02), and [High-Definition Map Based Precise Vehicle Localization Using Cameras and LIDARs] project funded by NAVER LABS Corporation.

|

||||

|

||||

## Contact

|

||||

If you have any questions, please contact:

|

||||

```

|

||||

paulgkim@kaist.ac.kr

|

||||

```

|

||||

|

||||

## License

|

||||

<a rel="license" href="http://creativecommons.org/licenses/by-nc-sa/4.0/"><img alt="Creative Commons License" style="border-width:0" src="https://i.creativecommons.org/l/by-nc-sa/4.0/88x31.png" /></a><br />This work is licensed under a <a rel="license" href="http://creativecommons.org/licenses/by-nc-sa/4.0/">Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License</a>.

|

||||

|

||||

### Copyright

|

||||

- All code on this page is copyrighted by KAIST and Naver Labs and published under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 License. You must attribute the work in the manner specified by the author. You may not use the work for commercial purposes, and you may only distribute the resulting work under the same license if you alter, transform, or create the work.

|

||||

|

|

@ -0,0 +1,2 @@

|

|||

# Go to

|

||||

- https://github.com/irapkaist/SC-LeGO-LOAM

|

||||

|

|

@ -0,0 +1,117 @@

|

|||

/***********************************************************************

|

||||

* Software License Agreement (BSD License)

|

||||

*

|

||||

* Copyright 2011-16 Jose Luis Blanco (joseluisblancoc@gmail.com).

|

||||

* All rights reserved.

|

||||

*

|

||||

* Redistribution and use in source and binary forms, with or without

|

||||

* modification, are permitted provided that the following conditions

|

||||

* are met:

|

||||

*

|

||||

* 1. Redistributions of source code must retain the above copyright

|

||||

* notice, this list of conditions and the following disclaimer.

|

||||

* 2. Redistributions in binary form must reproduce the above copyright

|

||||

* notice, this list of conditions and the following disclaimer in the

|

||||

* documentation and/or other materials provided with the distribution.

|

||||

*

|

||||

* THIS SOFTWARE IS PROVIDED BY THE AUTHOR ``AS IS'' AND ANY EXPRESS OR

|

||||

* IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES

|

||||

* OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED.

|

||||

* IN NO EVENT SHALL THE AUTHOR BE LIABLE FOR ANY DIRECT, INDIRECT,

|

||||

* INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT

|

||||

* NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE,

|

||||

* DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY

|

||||

* THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT

|

||||

* (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF

|

||||

* THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

||||

*************************************************************************/

|

||||

|

||||

#pragma once

|

||||

|

||||

#include <nanoflann.hpp>

|

||||

|

||||

#include <cassert>   // assert
#include <stdexcept> // std::runtime_error
#include <vector>

|

||||

|

||||

// ===== This example shows how to use nanoflann with these types of containers: =======

|

||||

//typedef std::vector<std::vector<double> > my_vector_of_vectors_t;

|

||||

//typedef std::vector<Eigen::VectorXd> my_vector_of_vectors_t; // This requires #include <Eigen/Dense>

|

||||

// =====================================================================================

|

||||

|

||||

|

||||

/** A simple vector-of-vectors adaptor for nanoflann, without duplicating the storage.

|

||||

* The i'th vector represents a point in the state space.

|

||||

*

|

||||

* \tparam DIM If set to >0, it specifies a compile-time fixed dimensionality for the points in the data set, allowing more compiler optimizations.

|

||||

* \tparam num_t The type of the point coordinates (typically, double or float).

|

||||

* \tparam Distance The distance metric to use: nanoflann::metric_L1, nanoflann::metric_L2, nanoflann::metric_L2_Simple, etc.

|

||||

* \tparam IndexType The type for indices in the KD-tree index (typically, size_t or int)

|

||||

*/

|

||||

template <class VectorOfVectorsType, typename num_t = double, int DIM = -1, class Distance = nanoflann::metric_L2, typename IndexType = size_t>

|

||||

struct KDTreeVectorOfVectorsAdaptor

|

||||

{

|

||||

typedef KDTreeVectorOfVectorsAdaptor<VectorOfVectorsType,num_t,DIM,Distance,IndexType> self_t; // include IndexType so self_t names this exact specialization

|

||||

typedef typename Distance::template traits<num_t,self_t>::distance_t metric_t;

|

||||

typedef nanoflann::KDTreeSingleIndexAdaptor< metric_t,self_t,DIM,IndexType> index_t;

|

||||

|

||||

index_t* index; //! The kd-tree index for the user to call its methods as usual with any other FLANN index.

|

||||

|

||||

/// Constructor: takes a const ref to the vector of vectors object with the data points

|

||||

KDTreeVectorOfVectorsAdaptor(const size_t /* dimensionality */, const VectorOfVectorsType &mat, const int leaf_max_size = 10) : m_data(mat)

|

||||

{

|

||||

assert(mat.size() != 0 && mat[0].size() != 0);

|

||||

const size_t dims = mat[0].size();

|

||||

if (DIM>0 && static_cast<int>(dims) != DIM)

|

||||

throw std::runtime_error("Data set dimensionality does not match the 'DIM' template argument");

|

||||

index = new index_t( static_cast<int>(dims), *this /* adaptor */, nanoflann::KDTreeSingleIndexAdaptorParams(leaf_max_size ) );

|

||||

index->buildIndex();

|

||||

}

|

||||

|

||||

~KDTreeVectorOfVectorsAdaptor() {

|

||||

delete index;

|

||||

}

|

||||

|

||||

const VectorOfVectorsType &m_data;

|

||||

|

||||

/** Query for the \a num_closest closest points to a given point (entered as query_point[0:dim-1]).

|

||||

* Note that this is a short-cut method for index->findNeighbors().

|

||||

* The user can also call index->... methods as desired.

|

||||

* \note nChecks_IGNORED is ignored but kept for compatibility with the original FLANN interface.

|

||||

*/

|

||||

inline void query(const num_t *query_point, const size_t num_closest, IndexType *out_indices, num_t *out_distances_sq, const int nChecks_IGNORED = 10) const

|

||||

{

|

||||

nanoflann::KNNResultSet<num_t,IndexType> resultSet(num_closest);

|

||||

resultSet.init(out_indices, out_distances_sq);

|

||||

index->findNeighbors(resultSet, query_point, nanoflann::SearchParams());

|

||||

}

|

||||

|

||||

/** @name Interface expected by KDTreeSingleIndexAdaptor

|

||||

* @{ */

|

||||

|

||||

const self_t & derived() const {

|

||||

return *this;

|

||||

}

|

||||

self_t & derived() {

|

||||

return *this;

|

||||

}

|

||||

|

||||

// Must return the number of data points

|

||||

inline size_t kdtree_get_point_count() const {

|

||||

return m_data.size();

|

||||

}

|

||||

|

||||

// Returns the dim'th component of the idx'th point in the class:

|

||||

inline num_t kdtree_get_pt(const size_t idx, const size_t dim) const {

|

||||

return m_data[idx][dim];

|

||||

}

|

||||

|

||||

// Optional bounding-box computation: return false to default to a standard bbox computation loop.

|

||||

// Return true if the BBOX was already computed by the class and returned in "bb" so it can be avoided to redo it again.

|

||||

// Look at bb.size() to find out the expected dimensionality (e.g. 2 or 3 for point clouds)

|

||||

template <class BBOX>

|

||||

bool kdtree_get_bbox(BBOX & /*bb*/) const {

|

||||

return false;

|

||||

}

|

||||

|

||||

/** @} */

|

||||

|

||||

}; // end of KDTreeVectorOfVectorsAdaptor

|

||||

|

|

@ -0,0 +1,340 @@

|

|||

#include "Scancontext.h"

|

||||

|

||||

// namespace SC2

|

||||

// {

|

||||

|

||||

void coreImportTest (void)

|

||||

{

|

||||

cout << "scancontext lib is successfully imported." << endl;

|

||||

} // coreImportTest

|

||||

|

||||

|

||||

float rad2deg(float radians)

|

||||

{

|

||||

return radians * 180.0 / M_PI;

|

||||

}

|

||||

|

||||

float deg2rad(float degrees)

|

||||

{

|

||||

return degrees * M_PI / 180.0;

|

||||

}

|

||||

|

||||

|

||||

float xy2theta( const float & _x, const float & _y )

|

||||

{

|

||||

if ( _x >= 0 && _y >= 0)

|

||||

return (180/M_PI) * atan(_y / _x);

|

||||

|

||||

if ( _x < 0 && _y >= 0)

|

||||

return 180 - ( (180/M_PI) * atan(_y / (-_x)) );

|

||||

|

||||

if ( _x < 0 && _y < 0)

|

||||

return 180 + ( (180/M_PI) * atan(_y / _x) );

|

||||

|

||||

if ( _x >= 0 && _y < 0)

|

||||

return 360 - ( (180/M_PI) * atan((-_y) / _x) );

|

||||

return 0.0f; // not reached for finite inputs; avoids falling off the end (e.g., NaN input)
} // xy2theta

|

||||

|

||||

|

||||

MatrixXd circshift( MatrixXd &_mat, int _num_shift )

|

||||

{

|

||||

// shift columns to right direction

|

||||

assert(_num_shift >= 0);

|

||||

|

||||

if( _num_shift == 0 )

|

||||

{

|

||||

MatrixXd shifted_mat( _mat );

|

||||

return shifted_mat; // Early return

|

||||

}

|

||||

|

||||

MatrixXd shifted_mat = MatrixXd::Zero( _mat.rows(), _mat.cols() );

|

||||

for ( int col_idx = 0; col_idx < _mat.cols(); col_idx++ )

|

||||

{

|

||||

int new_location = (col_idx + _num_shift) % _mat.cols();

|

||||

shifted_mat.col(new_location) = _mat.col(col_idx);

|

||||

}

|

||||

|

||||

return shifted_mat;

|

||||

|

||||

} // circshift

|

||||

|

||||

|

||||

std::vector<float> eig2stdvec( MatrixXd _eigmat )

|

||||

{

|

||||

std::vector<float> vec( _eigmat.data(), _eigmat.data() + _eigmat.size() );

|

||||

return vec;

|

||||

} // eig2stdvec

|

||||

|

||||

|

||||

double SCManager::distDirectSC ( MatrixXd &_sc1, MatrixXd &_sc2 )

|

||||

{

|

||||

int num_eff_cols = 0; // i.e., to exclude all-zero sectors

|

||||

double sum_sector_similarity = 0;

|

||||

for ( int col_idx = 0; col_idx < _sc1.cols(); col_idx++ )

|

||||

{

|

||||

VectorXd col_sc1 = _sc1.col(col_idx);

|

||||

VectorXd col_sc2 = _sc2.col(col_idx);

|

||||

|

||||

if( col_sc1.norm() == 0 || col_sc2.norm() == 0 )

|

||||

continue; // don't count this sector pair.

|

||||

|

||||

double sector_similarity = col_sc1.dot(col_sc2) / (col_sc1.norm() * col_sc2.norm());

|

||||

|

||||

sum_sector_similarity = sum_sector_similarity + sector_similarity;

|

||||

num_eff_cols = num_eff_cols + 1;

|

||||

}

|

||||

|

||||

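if( num_eff_cols == 0 )
return 1.0; // no comparable sector pair: treat as dissimilar instead of dividing by zero

double sc_sim = sum_sector_similarity / num_eff_cols;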

|

||||

return 1.0 - sc_sim;

|

||||

|

||||

} // distDirectSC

|

||||

|

||||

|

||||

int SCManager::fastAlignUsingVkey( MatrixXd & _vkey1, MatrixXd & _vkey2)

|

||||

{

|

||||

int argmin_vkey_shift = 0;

|

||||

double min_veky_diff_norm = 10000000;

|

||||

for ( int shift_idx = 0; shift_idx < _vkey1.cols(); shift_idx++ )

|

||||

{

|

||||

MatrixXd vkey2_shifted = circshift(_vkey2, shift_idx);

|

||||

|

||||

MatrixXd vkey_diff = _vkey1 - vkey2_shifted;

|

||||

|

||||

double cur_diff_norm = vkey_diff.norm();

|

||||

if( cur_diff_norm < min_veky_diff_norm )

|

||||

{

|

||||

argmin_vkey_shift = shift_idx;

|

||||

min_veky_diff_norm = cur_diff_norm;

|

||||

}

|

||||

}

|

||||

|

||||

return argmin_vkey_shift;

|

||||

|

||||

} // fastAlignUsingVkey

|

||||

|

||||

|

||||

std::pair<double, int> SCManager::distanceBtnScanContext( MatrixXd &_sc1, MatrixXd &_sc2 )

|

||||

{

|

||||

// 1. fast align using variant key (not in original IROS18)

|

||||

MatrixXd vkey_sc1 = makeSectorkeyFromScancontext( _sc1 );

|

||||

MatrixXd vkey_sc2 = makeSectorkeyFromScancontext( _sc2 );

|

||||

int argmin_vkey_shift = fastAlignUsingVkey( vkey_sc1, vkey_sc2 );

|

||||

|

||||

const int SEARCH_RADIUS = round( 0.5 * SEARCH_RATIO * _sc1.cols() ); // a half of search range

|

||||

std::vector<int> shift_idx_search_space { argmin_vkey_shift };

|

||||

for ( int ii = 1; ii < SEARCH_RADIUS + 1; ii++ )

|

||||

{

|

||||

shift_idx_search_space.push_back( (argmin_vkey_shift + ii + _sc1.cols()) % _sc1.cols() );

|

||||

shift_idx_search_space.push_back( (argmin_vkey_shift - ii + _sc1.cols()) % _sc1.cols() );

|

||||

}

|

||||

std::sort(shift_idx_search_space.begin(), shift_idx_search_space.end());

|

||||

|

||||

// 2. fast columnwise diff

|

||||

int argmin_shift = 0;

|

||||

double min_sc_dist = 10000000;

|

||||

for ( int num_shift: shift_idx_search_space )

|

||||

{

|

||||

MatrixXd sc2_shifted = circshift(_sc2, num_shift);

|

||||

double cur_sc_dist = distDirectSC( _sc1, sc2_shifted );

|

||||

if( cur_sc_dist < min_sc_dist )

|

||||

{

|

||||

argmin_shift = num_shift;

|

||||

min_sc_dist = cur_sc_dist;

|

||||

}

|

||||

}

|

||||

|

||||

return make_pair(min_sc_dist, argmin_shift);

|

||||

|

||||

} // distanceBtnScanContext

|

||||

|

||||

|

||||

MatrixXd SCManager::makeScancontext( pcl::PointCloud<SCPointType> & _scan_down )

|

||||

{

|

||||

TicToc t_making_desc;

|

||||

|

||||

int num_pts_scan_down = _scan_down.points.size();

|

||||

|

||||

// main

|

||||

const int NO_POINT = -1000;

|

||||

MatrixXd desc = NO_POINT * MatrixXd::Ones(PC_NUM_RING, PC_NUM_SECTOR);

|

||||

|

||||

SCPointType pt;

|

||||

float azim_angle, azim_range; // within 2d plane

|

||||

int ring_idx, sctor_idx;

|

||||

for (int pt_idx = 0; pt_idx < num_pts_scan_down; pt_idx++)

|

||||

{

|

||||

pt.x = _scan_down.points[pt_idx].x;

|

||||

pt.y = _scan_down.points[pt_idx].y;

|

||||

pt.z = _scan_down.points[pt_idx].z + LIDAR_HEIGHT; // naive adding is ok (all points should be > 0).

|

||||

|

||||

// xyz to ring, sector

|

||||

azim_range = sqrt(pt.x * pt.x + pt.y * pt.y);

|

||||

azim_angle = xy2theta(pt.x, pt.y);

|

||||

|

||||

// if range is out of roi, pass

|

||||

if( azim_range > PC_MAX_RADIUS )

|

||||

continue;

|

||||

|

||||

ring_idx = std::max( std::min( PC_NUM_RING, int(ceil( (azim_range / PC_MAX_RADIUS) * PC_NUM_RING )) ), 1 );

|

||||

sctor_idx = std::max( std::min( PC_NUM_SECTOR, int(ceil( (azim_angle / 360.0) * PC_NUM_SECTOR )) ), 1 );

|

||||

|

||||

// taking maximum z

|

||||

if ( desc(ring_idx-1, sctor_idx-1) < pt.z ) // -1 means cpp starts from 0

|

||||

desc(ring_idx-1, sctor_idx-1) = pt.z; // update for taking maximum value at that bin

|

||||

}

|

||||

|

||||

// reset no points to zero (for cosine dist later)

|

||||

for ( int row_idx = 0; row_idx < desc.rows(); row_idx++ )

|

||||

for ( int col_idx = 0; col_idx < desc.cols(); col_idx++ )

|

||||

if( desc(row_idx, col_idx) == NO_POINT )

|

||||

desc(row_idx, col_idx) = 0;

|

||||

|

||||

t_making_desc.toc("PolarContext making");

|

||||

|

||||

return desc;

|

||||

} // SCManager::makeScancontext

|

||||

|

||||

|

||||

MatrixXd SCManager::makeRingkeyFromScancontext( Eigen::MatrixXd &_desc )

|

||||

{

|

||||

/*

|

||||

* summary: rowwise mean vector

|

||||

*/

|

||||

Eigen::MatrixXd invariant_key(_desc.rows(), 1);

|

||||

for ( int row_idx = 0; row_idx < _desc.rows(); row_idx++ )

|

||||

{

|

||||

Eigen::MatrixXd curr_row = _desc.row(row_idx);

|

||||

invariant_key(row_idx, 0) = curr_row.mean();

|

||||

}

|

||||

|

||||

return invariant_key;

|

||||

} // SCManager::makeRingkeyFromScancontext

|

||||

|

||||

|

||||

MatrixXd SCManager::makeSectorkeyFromScancontext( Eigen::MatrixXd &_desc )

|

||||

{

|

||||

/*

|

||||

* summary: columnwise mean vector

|

||||

*/

|

||||

Eigen::MatrixXd variant_key(1, _desc.cols());

|

||||

for ( int col_idx = 0; col_idx < _desc.cols(); col_idx++ )

|

||||

{

|

||||

Eigen::MatrixXd curr_col = _desc.col(col_idx);

|

||||

variant_key(0, col_idx) = curr_col.mean();

|

||||

}

|

||||

|

||||

return variant_key;

|

||||

} // SCManager::makeSectorkeyFromScancontext

|

||||

|

||||

|

||||

void SCManager::makeAndSaveScancontextAndKeys( pcl::PointCloud<SCPointType> & _scan_down )

|

||||

{

|

||||

Eigen::MatrixXd sc = makeScancontext(_scan_down); // v1

|

||||

Eigen::MatrixXd ringkey = makeRingkeyFromScancontext( sc );

|

||||

Eigen::MatrixXd sectorkey = makeSectorkeyFromScancontext( sc );

|

||||

std::vector<float> polarcontext_invkey_vec = eig2stdvec( ringkey );

|

||||

|

||||

polarcontexts_.push_back( sc );

|

||||

polarcontext_invkeys_.push_back( ringkey );

|

||||

polarcontext_vkeys_.push_back( sectorkey );

|

||||

polarcontext_invkeys_mat_.push_back( polarcontext_invkey_vec );

|

||||

|

||||

// cout <<polarcontext_vkeys_.size() << endl;

|

||||

|

||||

} // SCManager::makeAndSaveScancontextAndKeys

|

||||

|

||||

|

||||

std::pair<int, float> SCManager::detectLoopClosureID ( void )

|

||||

{

|

||||

int loop_id { -1 }; // init with -1, -1 means no loop (== LeGO-LOAM's variable "closestHistoryFrameID")

|

||||

|

||||

auto curr_key = polarcontext_invkeys_mat_.back(); // current observation (query)

|

||||

auto curr_desc = polarcontexts_.back(); // current observation (query)

|

||||

|

||||

/*

|

||||

* step 1: candidates from ringkey tree_

|

||||

*/

|

||||

if( polarcontext_invkeys_mat_.size() < NUM_EXCLUDE_RECENT + 1)

|

||||

{

|

||||

std::pair<int, float> result {loop_id, 0.0};

|

||||

return result; // Early return

|

||||

}

|

||||

|

||||

// tree_ reconstruction (not mandatory to make everytime)

|

||||

if( tree_making_period_conter % TREE_MAKING_PERIOD_ == 0) // to save computation cost

|

||||

{

|

||||

TicToc t_tree_construction;

|

||||

|

||||

polarcontext_invkeys_to_search_.clear();

|

||||

polarcontext_invkeys_to_search_.assign( polarcontext_invkeys_mat_.begin(), polarcontext_invkeys_mat_.end() - NUM_EXCLUDE_RECENT ) ;

|

||||

|

||||

polarcontext_tree_.reset();

|

||||

polarcontext_tree_ = std::make_unique<InvKeyTree>(PC_NUM_RING /* dim */, polarcontext_invkeys_to_search_, 10 /* max leaf */ );

|

||||

// tree_ptr_->index->buildIndex(); // internally called in the constructor of InvKeyTree (for details, refer to nanoflann and KDTreeVectorOfVectorsAdaptor)

|

||||

t_tree_construction.toc("Tree construction");

|

||||

}

|

||||

tree_making_period_conter = tree_making_period_conter + 1;

|

||||

|

||||

double min_dist = 10000000; // init with something large

|

||||

int nn_align = 0;

|

||||

int nn_idx = 0;

|

||||

|

||||

// knn search

|

||||

std::vector<size_t> candidate_indexes( NUM_CANDIDATES_FROM_TREE );

|

||||

std::vector<float> out_dists_sqr( NUM_CANDIDATES_FROM_TREE );

|

||||

|

||||

TicToc t_tree_search;

|

||||

nanoflann::KNNResultSet<float> knnsearch_result( NUM_CANDIDATES_FROM_TREE );

|

||||

knnsearch_result.init( &candidate_indexes[0], &out_dists_sqr[0] );

|

||||

polarcontext_tree_->index->findNeighbors( knnsearch_result, &curr_key[0] /* query */, nanoflann::SearchParams(10) );

|

||||

t_tree_search.toc("Tree search");

|

||||

|

||||

/*

|

||||

* step 2: pairwise distance (find optimal columnwise best-fit using cosine distance)

|

||||

*/

|

||||

TicToc t_calc_dist;

|

||||

for ( int candidate_iter_idx = 0; candidate_iter_idx < NUM_CANDIDATES_FROM_TREE; candidate_iter_idx++ )

|

||||

{

|

||||

MatrixXd polarcontext_candidate = polarcontexts_[ candidate_indexes[candidate_iter_idx] ];

|

||||

std::pair<double, int> sc_dist_result = distanceBtnScanContext( curr_desc, polarcontext_candidate );

|

||||

|

||||

double candidate_dist = sc_dist_result.first;

|

||||

int candidate_align = sc_dist_result.second;

|

||||

|

||||

if( candidate_dist < min_dist )

|

||||

{

|

||||

min_dist = candidate_dist;

|

||||

nn_align = candidate_align;

|

||||

|

||||

nn_idx = candidate_indexes[candidate_iter_idx];

|

||||

}

|

||||

}

|

||||

t_calc_dist.toc("Distance calc");

|

||||

|

||||

/*

|

||||

* loop threshold check

|

||||

*/

|

||||

if( min_dist < SC_DIST_THRES )

|

||||

{

|

||||

loop_id = nn_idx;

|

||||

|

||||

// std::cout.precision(3);

|

||||

cout << "[Loop found] Nearest distance: " << min_dist << " btn " << polarcontexts_.size()-1 << " and " << nn_idx << "." << endl;

|

||||

cout << "[Loop found] yaw diff: " << nn_align * PC_UNIT_SECTORANGLE << " deg." << endl;

|

||||

}

|

||||

else

|

||||

{

|

||||

std::cout.precision(3);

|

||||

cout << "[Not loop] Nearest distance: " << min_dist << " btn " << polarcontexts_.size()-1 << " and " << nn_idx << "." << endl;

|

||||

cout << "[Not loop] yaw diff: " << nn_align * PC_UNIT_SECTORANGLE << " deg." << endl;

|

||||

}

|

||||

|

||||

// To do: return also nn_align (i.e., yaw diff)

|

||||

float yaw_diff_rad = deg2rad(nn_align * PC_UNIT_SECTORANGLE);

|

||||

std::pair<int, float> result {loop_id, yaw_diff_rad};

|

||||

|

||||

return result;

|

||||

|

||||

} // SCManager::detectLoopClosureID

|

||||

|

||||

// } // namespace SC2

|

||||

|

|

@ -0,0 +1,110 @@

|

|||

#pragma once

|

||||

|

||||

#include <ctime>

|

||||

#include <cassert>

|

||||

#include <cmath>

|

||||

#include <utility>

|

||||

#include <vector>

|

||||

#include <algorithm>

|

||||

#include <cstdlib>

|

||||

#include <memory>

|

||||

#include <iostream>

|

||||

|

||||

#include <Eigen/Dense>

|

||||

|

||||

#include <opencv2/opencv.hpp>

|

||||

#include <opencv2/core/eigen.hpp>

|

||||

#include <opencv2/highgui/highgui.hpp>

|

||||

#include <cv_bridge/cv_bridge.h>

|

||||

|

||||

#include <pcl/point_cloud.h>

|

||||

#include <pcl/point_types.h>

|

||||

#include <pcl/filters/voxel_grid.h>

|

||||

#include <pcl_conversions/pcl_conversions.h>

|

||||

|

||||

#include "nanoflann.hpp"

|

||||

#include "KDTreeVectorOfVectorsAdaptor.h"

|

||||

|

||||

#include "tictoc.h"

|

||||

|

||||

using namespace Eigen;

|

||||

using namespace nanoflann;

|

||||

|

||||

using std::cout;

|

||||

using std::endl;

|

||||

using std::make_pair;

|

||||

|

||||

using std::atan2;

|

||||

using std::cos;

|

||||

using std::sin;

|

||||

|

||||

using SCPointType = pcl::PointXYZI; // using xyz only, but a user can exchange the original bin encoding function (i.e., max height) for max intensity (for details, refer to the ICRA 20 Intensity Scan Context paper)

|

||||

using KeyMat = std::vector<std::vector<float> >;

|

||||

using InvKeyTree = KDTreeVectorOfVectorsAdaptor< KeyMat, float >;

|

||||

|

||||

|

||||

// namespace SC2

|

||||

// {

|

||||

|

||||

void coreImportTest ( void );

|

||||

|

||||

|

||||

// sc param-independent helper functions

|

||||

float xy2theta( const float & _x, const float & _y );

|

||||

MatrixXd circshift( MatrixXd &_mat, int _num_shift );

|

||||

std::vector<float> eig2stdvec( MatrixXd _eigmat );

|

||||

|

||||

|

||||

class SCManager

|

||||

{

|

||||

public:

|

||||

SCManager( ) = default; // reserving data space (of std::vector) could be considered. but the descriptor is lightweight so don't care.

|

||||

|

||||

Eigen::MatrixXd makeScancontext( pcl::PointCloud<SCPointType> & _scan_down );

|

||||

Eigen::MatrixXd makeRingkeyFromScancontext( Eigen::MatrixXd &_desc );

|

||||

Eigen::MatrixXd makeSectorkeyFromScancontext( Eigen::MatrixXd &_desc );

|

||||

|

||||

int fastAlignUsingVkey ( MatrixXd & _vkey1, MatrixXd & _vkey2 );

|

||||

double distDirectSC ( MatrixXd &_sc1, MatrixXd &_sc2 ); // "d" (eq 5) in the original paper (IROS 18)

|

||||

std::pair<double, int> distanceBtnScanContext ( MatrixXd &_sc1, MatrixXd &_sc2 ); // "D" (eq 6) in the original paper (IROS 18)

|

||||

|

||||

// User-side API

|

||||

void makeAndSaveScancontextAndKeys( pcl::PointCloud<SCPointType> & _scan_down );

|

||||

std::pair<int, float> detectLoopClosureID( void ); // int: nearest node index, float: relative yaw

|

||||

|

||||

public:

|

||||

// hyperparameters

|

||||

const double LIDAR_HEIGHT = 2.0; // lidar height: added so that scans in the lidar local frame (not the robot base frame) can be used directly. If the scans are already transformed to the robot frame, set this to 0.

|

||||

|

||||

const int PC_NUM_RING = 20; // 20 in the original paper (IROS 18)

|

||||

const int PC_NUM_SECTOR = 60; // 60 in the original paper (IROS 18)

|

||||

const double PC_MAX_RADIUS = 80.0; // 80 meter max in the original paper (IROS 18)

|

||||

const double PC_UNIT_SECTORANGLE = 360.0 / double(PC_NUM_SECTOR);

|

||||

const double PC_UNIT_RINGGAP = PC_MAX_RADIUS / double(PC_NUM_RING);

|

||||

|

||||

// tree

|

||||

const int NUM_EXCLUDE_RECENT = 50; // a simple keyframe gap; excluding recent nodes by position distance would also work.

|

||||

const int NUM_CANDIDATES_FROM_TREE = 10; // 10 is enough (see the IROS 18 paper)

|

||||

|

||||

// loop thres

|

||||

const double SEARCH_RATIO = 0.1; // for fast comparison: instead of brute force, searching only 10% is okay. Not in the original conference paper; added in the improved version.

|

||||

const double SC_DIST_THRES = 0.13; // empirically 0.1-0.2 is fine (rare false alarms) for a 20x60 polar context, but above 0.15 a DCS or ICP fitness score check (e.g., as in LeGO-LOAM) is needed for robustness

|

||||

// const double SC_DIST_THRES = 0.5; // 0.4-0.6 is a good choice when combined with a robust kernel (e.g., Cauchy, DCS) and an ICP fitness threshold; otherwise 0.1-0.15 is recommended

|

||||

|

||||

// config

|

||||

const int TREE_MAKING_PERIOD_ = 50; // tree rebuild period: avoids rebuilding the tree on every frame to save time. Use a smaller value to find very recent revisits (a rebuild is fast enough, ~5-50 ms depending on N).

|

||||

int tree_making_period_conter = 0;

|

||||

|

||||

// data

|

||||

std::vector<double> polarcontexts_timestamp_; // optional.

|

||||

std::vector<Eigen::MatrixXd> polarcontexts_;

|

||||

std::vector<Eigen::MatrixXd> polarcontext_invkeys_;

|

||||

std::vector<Eigen::MatrixXd> polarcontext_vkeys_;

|

||||

|

||||

KeyMat polarcontext_invkeys_mat_;

|

||||

KeyMat polarcontext_invkeys_to_search_;

|

||||

std::unique_ptr<InvKeyTree> polarcontext_tree_;

|

||||

|

||||

}; // SCManager

|

||||

|

||||

// } // namespace SC2
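The header above only declares `makeScancontext` and the key functions; their bodies are in the suppressed source file. As a rough illustration of the descriptor itself (each polar bin stores the maximum point height), here is a minimal NumPy sketch. The function names are hypothetical, the defaults copy the constants in the header, and empty bins are simply left at 0, which is a simplification. The ring key and sector key are assumed here to be row and column means.

```python
import numpy as np

def make_scan_context(points, num_ring=20, num_sector=60,
                      max_radius=80.0, lidar_height=2.0):
    """Build a (num_ring x num_sector) max-height polar descriptor (sketch)."""
    xyz = np.asarray(points, dtype=float).reshape(-1, 3)
    x, y = xyz[:, 0], xyz[:, 1]
    z = xyz[:, 2] + lidar_height              # shift heights by the sensor height
    rng = np.hypot(x, y)                      # horizontal range of each point
    theta = np.degrees(np.arctan2(y, x)) % 360.0

    keep = rng <= max_radius                  # drop points beyond the max radius
    ring = np.clip(np.ceil(rng / max_radius * num_ring).astype(int), 1, num_ring) - 1
    sector = np.clip(np.ceil(theta / 360.0 * num_sector).astype(int), 1, num_sector) - 1

    desc = np.zeros((num_ring, num_sector))   # assumption: empty bins stay at 0
    np.maximum.at(desc, (ring[keep], sector[keep]), z[keep])
    return desc

def ring_key(desc):
    """Rotation-invariant key: mean of each ring (row). Assumed definition."""
    return desc.mean(axis=1)

def sector_key(desc):
    """Per-sector key: mean of each sector (column). Assumed definition."""
    return desc.mean(axis=0)
```

A call such as `make_scan_context(cloud_xyz)` returns a 20x60 array for an N x 3 point array. The ring key does not change when the columns are circularly shifted, which is why it is suitable for a KD-tree lookup before the shift-aware comparison.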

|

||||

File diff suppressed because it is too large


|

|

@ -0,0 +1,47 @@

|

|||

// Author: Tong Qin qintonguav@gmail.com

|

||||

// Shaozu Cao saozu.cao@connect.ust.hk

|

||||

|

||||

#pragma once

|

||||

|

||||

#include <ctime>

|

||||

#include <iostream>

|

||||

#include <string>

|

||||

#include <cstdlib>

|

||||

#include <chrono>

|

||||

|

||||

class TicToc

|

||||

{

|

||||

public:

|

||||

TicToc()

|

||||

{

|

||||

tic();

|

||||

}

|

||||

|

||||

TicToc( bool _disp )

|

||||

{

|

||||

disp_ = _disp;

|

||||

tic();

|

||||

}

|

||||

|

||||

void tic()

|

||||

{

|

||||

start = std::chrono::system_clock::now();

|

||||

}

|

||||

|

||||

void toc( std::string _about_task )

|

||||

{

|

||||

end = std::chrono::system_clock::now();

|

||||

std::chrono::duration<double> elapsed_seconds = end - start;

|

||||

double elapsed_ms = elapsed_seconds.count() * 1000;

|

||||

|

||||

if( disp_ )

|

||||

{

|

||||

std::cout.precision(3); // 10 for sec, 3 for ms

|

||||

std::cout << _about_task << ": " << elapsed_ms << " msec." << std::endl;

|

||||

}

|

||||

}

|

||||

|

||||

private:

|

||||

std::chrono::time_point<std::chrono::system_clock> start, end;

|

||||

bool disp_ = false;

|

||||

};

|

||||

|

|

@ -0,0 +1,2 @@

|

|||

# Scan Context for LiDAR SLAM

|

||||

- Go to [PyICP SLAM](https://github.com/kissb2/PyICP-SLAM)

|

||||

|

|

@ -0,0 +1,81 @@

|

|||

clear; clc;

|

||||

addpath(genpath('../../matlab/'));

|

||||

|

||||

|

||||

%% Parameters

|

||||

data_dir = '../../sample_data/KITTI/00/velodyne/';

|

||||

|

||||

basic_max_range = 80; % meter

|

||||

basic_num_sectors = 60;

|

||||

basic_num_rings = 20;

|

||||

|

||||

|

||||

%% Visualization of ScanContext

|

||||

bin_path = [data_dir, '000094.bin'];

|

||||

ptcloud = KITTIbin2Ptcloud(bin_path);

|

||||

sc = Ptcloud2ScanContext(ptcloud, basic_num_sectors, basic_num_rings, basic_max_range);

|

||||

|

||||

h1 = figure(1); clf;

|

||||

imagesc(sc);

|

||||

set(gcf, 'Position', [10 10 800 300]);

|

||||

xlabel('sector'); ylabel('ring');

|

||||

|

||||

% for vivid visualization

|

||||

colormap jet;

|

||||

caxis([0, 4]); % KITTI00 is usually in z: [0, 4]

|

||||

|

||||

|

||||

%% Making Ringkey and maintaining kd-tree

|

||||

% Read the PlaceRecognizer.m

|

||||

|

||||

|

||||

%% ScanContext with different resolution

|

||||

|

||||

figure(2); clf;

|

||||

pcshow(ptcloud); colormap jet; caxis([0 4]);

|

||||

|

||||

res = [0.25, 0.5, 1, 2, 3];

|

||||

|

||||

h2=figure(3); clf;

|

||||

set(gcf, 'Position', [10 10 500 1000]);

|

||||

|

||||

for i = 1:length(res)

|

||||

num_sectors = basic_num_sectors * res(i);

|

||||

num_rings = basic_num_rings * res(i);

|

||||

|

||||

sc = Ptcloud2ScanContext(ptcloud, num_sectors, num_rings, basic_max_range);

|

||||

|

||||

subplot(length(res), 1, i);

|

||||

imagesc(sc); hold on;

|

||||

colormap jet;

|

||||

caxis([0, 4]); % KITTI00 is usually in z: [0, 4]

|

||||

end

|

||||

|

||||

|

||||

|

||||

%% Comparison between two scan contexts

|

||||

|

||||

KITTI_bin1a_path = [data_dir, '000094.bin'];

|

||||

KITTI_bin1b_path = [data_dir, '000095.bin'];

|

||||

KITTI_bin2a_path = [data_dir, '000198.bin'];

|

||||

KITTI_bin2b_path = [data_dir, '000199.bin'];

|

||||

|

||||

ptcloud_KITTI1a = KITTIbin2Ptcloud(KITTI_bin1a_path);

|

||||

ptcloud_KITTI1b = KITTIbin2Ptcloud(KITTI_bin1b_path);

|

||||

ptcloud_KITTI2a = KITTIbin2Ptcloud(KITTI_bin2a_path);

|

||||

ptcloud_KITTI2b = KITTIbin2Ptcloud(KITTI_bin2b_path);

|

||||

|

||||

sc_KITTI1a = Ptcloud2ScanContext(ptcloud_KITTI1a, basic_num_sectors, basic_num_rings, basic_max_range);

|

||||

sc_KITTI1b = Ptcloud2ScanContext(ptcloud_KITTI1b, basic_num_sectors, basic_num_rings, basic_max_range);

|

||||

sc_KITTI2a = Ptcloud2ScanContext(ptcloud_KITTI2a, basic_num_sectors, basic_num_rings, basic_max_range);

|

||||

sc_KITTI2b = Ptcloud2ScanContext(ptcloud_KITTI2b, basic_num_sectors, basic_num_rings, basic_max_range);

|

||||

|

||||

dist_1a_1b = DistanceBtnScanContexts(sc_KITTI1a, sc_KITTI1b);

|

||||

dist_1b_2a = DistanceBtnScanContexts(sc_KITTI1b, sc_KITTI2a);

|

||||

dist_1a_2a = DistanceBtnScanContexts(sc_KITTI1a, sc_KITTI2a);

|

||||

dist_2a_2b = DistanceBtnScanContexts(sc_KITTI2a, sc_KITTI2b);

|

||||

|

||||

disp([dist_1a_1b, dist_1b_2a, dist_1a_2a, dist_2a_2b]);
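The script above calls `DistanceBtnScanContexts`, whose body is not shown in this diff. A minimal NumPy sketch of the idea follows: a column-wise cosine distance ("d" in the header comments), minimized over all circular column shifts ("D"). The function names are hypothetical, and the handling of empty columns is an assumption.

```python
import numpy as np

def dist_direct(sc1, sc2):
    """Mean column-wise cosine distance between two aligned scan contexts."""
    n1 = np.linalg.norm(sc1, axis=0)
    n2 = np.linalg.norm(sc2, axis=0)
    valid = (n1 > 0) & (n2 > 0)        # assumption: skip columns empty in either scan
    if not valid.any():
        return 1.0
    cos = (sc1[:, valid] * sc2[:, valid]).sum(axis=0) / (n1[valid] * n2[valid])
    return 1.0 - float(cos.mean())

def dist_scan_context(sc1, sc2):
    """Brute-force minimum of dist_direct over all column shifts of sc2.

    Returns (distance, shift); the shift is in sector units and corresponds
    to a relative yaw of shift * 360 / num_sector degrees.
    """
    num_sector = sc2.shape[1]
    dists = [dist_direct(sc1, np.roll(sc2, s, axis=1)) for s in range(num_sector)]
    best = int(np.argmin(dists))
    return dists[best], best
```

This version tries every shift. The `SEARCH_RATIO` constant in the C++ header suggests that the C++ version checks only a fraction of the shifts, which this sketch does not attempt.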

|

||||

|

||||

|

||||

|

||||

Binary file not shown.

|

After Width: | Height: | Size: 108 KiB |

Binary file not shown.

|

After Width: | Height: | Size: 2.0 MiB |

Binary file not shown.

|

After Width: | Height: | Size: 25 KiB |

|

|

@ -0,0 +1,112 @@

|

|||

%% information

|

||||

% main for Sampling places

|

||||

|

||||

%%

|

||||

clear; clc;

|

||||

addpath(genpath('../../../../../matlab/'));

|

||||

addpath(genpath('./helper'));

|

||||

|

||||

SaveDirectoryList

|

||||

Parameters

|

||||

|

||||

%% Preparation 1: make pre-determined Grid Cell index

|

||||

PlaceIndexAndGridCenters_10m = makeGridCellIndex(xRange, yRange, 10);

|

||||

|

||||

%% Preparation 2: get scan times

|

||||

SequenceDate = '2012-01-15'; % ### Change this part to your date

|

||||

ScanBaseDir = 'F:\NCLT/'; % ### Change this part to your path

|

||||

|

||||

ScanDir = strcat(ScanBaseDir, SequenceDate, '/velodyne_sync/');

|

||||

Scans = dir(ScanDir); Scans = Scans(~[Scans.isdir]); Scans = {Scans(:).name}; % drop '.', '..' and any sub-directories

|

||||

ScanTimes = getNCLTscanInformation(Scans);

|

||||

|

||||

%% Preparation 3: load GT pose (for calc moving diff and location)

|

||||

GroundTruthPosePath = strcat(ScanBaseDir, SequenceDate, '/groundtruth_', SequenceDate, '.csv');

|

||||

GroundTruthPoseData = csvread(GroundTruthPosePath);

|

||||

|

||||

GroundTruthPoseTime = GroundTruthPoseData(:, 1);

|

||||

GroundTruthPoseXYZ = GroundTruthPoseData(:, 2:4);

|

||||

|

||||

nGroundTruthPoses = size(GroundTruthPoseData, 1);

|

||||

|

||||

%% logger

|

||||

TrajectoryInformationWRT10mCell = [];

|

||||

|

||||

nTotalSampledPlaces = 0;

|

||||

|

||||

%% Main: Sampling

|

||||

|

||||

MoveCounter = 0; % reset to 0 each time SamplingGap is reached

|

||||

for ii = 1000:nGroundTruthPoses % start at a fairly large index (1000) to skip the first several NaN poses

|

||||

curTime = GroundTruthPoseTime(ii, 1);

|

||||

|

||||

prvPose = GroundTruthPoseXYZ(ii-1, :);

|

||||

curPose = GroundTruthPoseXYZ(ii, :);

|

||||

|

||||

curMove = norm(curPose - prvPose);

|

||||

MoveCounter = MoveCounter + curMove;

|

||||

|

||||

if(MoveCounter >= SamplingGap)

|

||||

nTotalSampledPlaces = nTotalSampledPlaces + 1;

|

||||

curSamplingCounter = nTotalSampledPlaces;

|

||||

|

||||

% Returns the index of the cell, where the current pose is closest to the cell's center coordinates.

|

||||

PlaceIdx_10m = getPlaceIdx(curPose, PlaceIndexAndGridCenters_10m); % 2nd argument is the [index, xCenter, yCenter] table of the 10 m grid

|

||||

|

||||

% load current point cloud

|

||||

curPtcloud = getNearestPtcloud( ScanTimes, curTime, Scans, ScanDir);

|

||||

|

||||

%% Save data

|

||||

% log

|

||||

TrajectoryInformationWRT10mCell = [TrajectoryInformationWRT10mCell; curTime, curPose, nTotalSampledPlaces, PlaceIdx_10m];

|

||||

|

||||

% scan context

|

||||

ScanContextForward = Ptcloud2ScanContext(curPtcloud, nSectors, nRings, Lmax);

|

||||

|

||||

% SCI gray (1 channel)

|

||||

ScanContextForwardRanged = ScaleSc2Img(ScanContextForward, NCLTminHeight, NCLTmaxHeight);

|

||||

ScanContextForwardScaled = ScanContextForwardRanged./maxColor;

|

||||

|

||||

SCIforwardGray = round(ScanContextForwardScaled*255);

|

||||

SCIforwardGray = ind2gray(SCIforwardGray, gray(255));

|

||||

|

||||

% SCI jet (color, 3 channel)

|

||||

SCIforwardColor = round(ScanContextForwardScaled*255);

|

||||

SCIforwardColor = ind2rgb(SCIforwardColor, jet(255));

|

||||

|

||||

saveSCIcolor(SCIforwardColor, DIR_SCIcolor, curSamplingCounter, PlaceIdx_10m, '10', 'f');

|

||||

|

||||

% SCI jet + Backward dataAug

|

||||

ScanContextBackwardScaled = circshift(ScanContextForwardScaled, nSectors/2, 2);

|

||||

SCIbackwardColor = round(ScanContextBackwardScaled*255);

|

||||

SCIbackwardColor = ind2rgb(SCIbackwardColor, jet(255));

|

||||

|

||||

saveSCIcolor(SCIforwardColor, DIR_SCIcolorAlsoBack, curSamplingCounter, PlaceIdx_10m, '10', 'f');

|

||||

saveSCIcolor(SCIbackwardColor, DIR_SCIcolorAlsoBack, curSamplingCounter, PlaceIdx_10m, '10', 'b');

|

||||

|

||||

% End: Reset counter

|

||||

MoveCounter = 0;

|

||||

|

||||

% Tracking progress message

|

||||

if(rem(curSamplingCounter, 100) == 0)

|

||||

message = strcat(num2str(curSamplingCounter), "th sample is saved." );

|

||||

disp(message)

|

||||

end

|

||||

|

||||

end

|

||||

|

||||

end

|

||||

|

||||

|

||||

%% save Trajectory Information

|

||||

% 10m

|

||||

filepath = strcat(DIR_SampledPlacesInformation, '/TrajectoryInformation.csv');

|

||||

TrajectoryInformation = TrajectoryInformationWRT10mCell;

|

||||

dlmwrite(filepath, TrajectoryInformation, 'precision','%.6f')

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

|

@ -0,0 +1,29 @@

|

|||

% flag for train/test

|

||||

IF_TRAINING = 1;

|

||||

|

||||

% sampling gap

|

||||

SamplingGap = 1; % in meter

|

||||

|

||||

% place resolution

|

||||

PlaceCellSize = 10; % in meter

|

||||

|

||||

% NCLT region

|

||||

xRange = [-350, 130];

|

||||

yRange = [-730, 120];

|

||||

|

||||

|

||||

% scan context

|

||||

nRings = 40;

|

||||

nSectors = 120;

|

||||

Lmax = 80;

|

||||

|

||||

% scan context image

|

||||

NCLTminHeight = 0;

|

||||

NCLTmaxHeight = 15;

|

||||

|

||||

SCI_HEIGHT_RANGE = [NCLTminHeight, NCLTmaxHeight];

|

||||

|

||||

minColor = 0;

|

||||

maxColor = 255;

|

||||

rangeColor = maxColor - minColor;

|

||||

|

||||

|

|

@ -0,0 +1,6 @@

|

|||

|

||||

%% Save Directories

|

||||

|

||||

DIR_SampledPlacesInformation = './data/SampledPlacesInformation/';

|

||||

DIR_SCIcolor = './data/SCI_jet0to15/';

|

||||

DIR_SCIcolorAlsoBack = './data/SCI_jet0to15_BackAug/';

|

||||

|

|

@ -0,0 +1,33 @@

|

|||

function [ scScaled ] = ScaleSc2Img( scOriginal, minHeight, maxHeight )

|

||||

|

||||

maxColor = 255;

|

||||

|

||||

rangeHeight = maxHeight - minHeight;

|

||||

|

||||

nRows = size(scOriginal, 1);

|

||||

nCols = size(scOriginal, 2);

|

||||

|

||||

scOriginalRangeCut = scOriginal;

|

||||

% cut into range

|

||||

for ithRow = 1:nRows

|

||||

for jthCol = 1:nCols

|

||||

|

||||

ithPixel = scOriginal(ithRow, jthCol);

|

||||

|

||||

if(ithPixel >= maxHeight)

|

||||

scOriginalRangeCut(ithRow, jthCol) = maxHeight;

|

||||

end

|

||||

|

||||

if(ithPixel <= minHeight)

|

||||

scOriginalRangeCut(ithRow, jthCol) = minHeight;

|

||||

end

|

||||

|

||||

scOriginalRangeCut(ithRow, jthCol) = round((scOriginalRangeCut(ithRow, jthCol) - minHeight) * (maxColor/rangeHeight)); % subtract minHeight so a nonzero lower bound also maps to 0

|

||||

end

|

||||

end

|

||||

|

||||

scScaled = scOriginalRangeCut;

|

||||

|

||||

|

||||

end
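The per-pixel loop in `ScaleSc2Img` clips each height to `[minHeight, maxHeight]` and rescales it to `[0, 255]`. The same operation can be written without loops; the NumPy sketch below uses a hypothetical function name and subtracts the lower bound before scaling, which gives the same result as the MATLAB code whenever `minHeight` is 0 (as in `Parameters`). Note that `np.round` rounds exact halves to the nearest even integer, whereas MATLAB's `round` rounds them away from zero.

```python
import numpy as np

def scale_sc_to_img(sc, min_height, max_height, max_color=255):
    """Clip heights to [min_height, max_height] and map them to [0, max_color]."""
    sc = np.asarray(sc, dtype=float)
    clipped = np.clip(sc, min_height, max_height)
    scale = max_color / (max_height - min_height)
    return np.round((clipped - min_height) * scale)
```

With the NCLT settings above, `scale_sc_to_img(sc, 0, 15)` maps a height of 15 m or more to 255 and anything at or below 0 m to 0.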

|

||||

|

||||

|

|

@ -0,0 +1,15 @@

|

|||

function [ ScanTimes ] = getNCLTscanInformation( Scans )

|

||||

|

||||

nScans = length(Scans);

|

||||

|

||||

TIME_LENGTH = 16; % number of leading characters in the file name that hold the timestamp; do not change

|

||||

ScanTimes = zeros(nScans, 1);

|

||||

for i=1:nScans

|

||||

ithScanName = Scans{i};

|

||||

ithScanTime = str2double(ithScanName(1:TIME_LENGTH));

|

||||

ScanTimes(i) = ithScanTime;

|

||||

end

|

||||

|

||||

|

||||

end

|

||||

|

||||

|

|

@ -0,0 +1,9 @@

|

|||

function [ Ptcloud ] = getNearestPtcloud( ScanTimes, curTime, Scans, ScanDir)

|

||||

|

||||

[MinTimeDelta, ArgminIdx] = min(abs(ScanTimes-curTime));

|

||||

ArgminBinName = Scans{ArgminIdx};

|

||||

ArgminBinPath = strcat(ScanDir, ArgminBinName);

|

||||

Ptcloud = NCLTbin2Ptcloud(ArgminBinPath);

|

||||

|

||||

end

|

||||

|

||||

|

|

@ -0,0 +1,23 @@

|

|||

function [ PlaceIdx ] = getPlaceIdx(curPose, PlaceIndexAndGridCenters)

|

||||

|

||||

%% load meta file

|

||||

|

||||

%% Main

|

||||

curX = curPose(1);

|

||||

curY = curPose(2);

|

||||

|

||||

PlaceCellCenters = PlaceIndexAndGridCenters(:, 2:3);

|

||||

nPlaces = size(PlaceCellCenters, 1);

|

||||

|

||||

Dists = zeros(nPlaces, 1);

|

||||

for ii=1:nPlaces

|

||||

Dist = norm(PlaceCellCenters(ii, :) - [curX, curY]);

|

||||

Dists(ii) = Dist;

|

||||

end

|

||||

|

||||

[NearestDist, NearestIdx] = min(Dists);

|

||||

|

||||

PlaceIdx = NearestIdx;

|

||||

|

||||

end

|

||||

|

||||

|

|

@ -0,0 +1,17 @@

|

|||

function [ theta ] = getThetaFromXY( x, y )

|

||||

|

||||

if (x >= 0 && y >= 0)

|

||||

theta = 180/pi * atan(y/x);

|

||||

end

|

||||

if (x < 0 && y >= 0)

|

||||

theta = 180 - ((180/pi) * atan(y/(-x)));

|

||||

end

|

||||

if (x < 0 && y < 0)

|

||||

theta = 180 + ((180/pi) * atan(y/x));

|

||||

end

|

||||

if ( x >= 0 && y < 0)

|

||||

theta = 360 - ((180/pi) * atan((-y)/x));

|

||||

end

|

||||

|

||||

end
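The four quadrant branches in `getThetaFromXY` compute the angle of `(x, y)` in degrees within `[0, 360)`. A two-argument arctangent gives the same values in one expression. The sketch below is an illustrative equivalent with a hypothetical name, not a drop-in replacement: at the origin it returns 0, whereas the MATLAB version evaluates `atan(0/0)` and yields NaN.

```python
import math

def theta_from_xy(x, y):
    """Angle of the point (x, y) in degrees, wrapped to [0, 360)."""
    theta = math.degrees(math.atan2(y, x)) % 360.0
    # Guard against floating-point wrap-around to exactly 360.0.
    return 0.0 if theta >= 360.0 else theta
```

For example, `theta_from_xy(-1, -1)` lands in the third quadrant (225 degrees), matching the `180 + atan(y/x)` branch above.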

|

||||

|

||||

|

|

@ -0,0 +1,28 @@

|

|||

function [ PlaceIndexAndGridCenters ] = makeGridCellIndex ( xRange, yRange, PlaceCellSize )

|

||||

|

||||

xSize = xRange(2) - xRange(1);

|

||||

ySize = yRange(2) - yRange(1);

|

||||

|

||||

nGridX = round(xSize/PlaceCellSize);

|

||||

nGridY = round(ySize/PlaceCellSize);

|

||||

|

||||

xGridBoundaries = linspace(xRange(1), xRange(2), nGridX+1);

|

||||

yGridBoundaries = linspace(yRange(1), yRange(2), nGridY+1);

|

||||

|

||||

nTotalIndex = nGridX * nGridY;

|

||||

|

||||

curAssignedIndex = 1;

|

||||

PlaceIndexAndGridCenters = zeros(nTotalIndex, 3);

|

||||

for ii=1:nGridX

|

||||

xGridCenter = (xGridBoundaries(ii+1) + xGridBoundaries(ii))/2;

|

||||

for jj=1:nGridY

|

||||

yGridCenter = (yGridBoundaries(jj+1) + yGridBoundaries(jj))/2;

|

||||

|

||||

PlaceIndexAndGridCenters(curAssignedIndex, :) = [curAssignedIndex, xGridCenter, yGridCenter];

|

||||

curAssignedIndex = curAssignedIndex + 1;

|

||||

end

|

||||

|

||||

end

|

||||

|

||||

end
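`makeGridCellIndex` enumerates cell centers with x in the outer loop and y in the inner loop, and `getPlaceIdx` then picks the nearest center. A vectorized NumPy sketch of both steps is shown below. The function names are hypothetical, and the returned index is 0-based, so it is one less than the MATLAB place index.

```python
import numpy as np

def make_grid_centers(x_range, y_range, cell_size):
    """Return an (nX * nY, 2) array of cell centers, x-major like the MATLAB loop."""
    n_x = int(round((x_range[1] - x_range[0]) / cell_size))
    n_y = int(round((y_range[1] - y_range[0]) / cell_size))
    xb = np.linspace(x_range[0], x_range[1], n_x + 1)   # cell boundaries in x
    yb = np.linspace(y_range[0], y_range[1], n_y + 1)   # cell boundaries in y
    xc = (xb[:-1] + xb[1:]) / 2
    yc = (yb[:-1] + yb[1:]) / 2
    gx, gy = np.meshgrid(xc, yc, indexing="ij")
    return np.column_stack([gx.ravel(), gy.ravel()])

def nearest_place_index(xy, centers):
    """0-based index of the cell center closest to the 2D position xy."""
    d = np.linalg.norm(centers - np.asarray(xy, dtype=float), axis=1)
    return int(np.argmin(d))
```

With the NCLT region from `Parameters` (`xRange = [-350, 130]`, `yRange = [-730, 120]`) and 10 m cells, this yields 48 x 85 = 4080 candidate places.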

|

||||

|

||||

|

|

@ -0,0 +1,22 @@

|

|||

function [ ] = saveSCIcolor(curSCIcolor, DIR_SCIcolor, SamplingCounter, PlaceIdx, CellSize, ForB)

|

||||

|

||||

SCIcolor = curSCIcolor;

|

||||

|

||||

curSamplingCounter = num2str(SamplingCounter,'%0#6.f');

|

||||

curSamplingCounter = curSamplingCounter(1:end-1);

|

||||

|

||||

curPlaceIdx = num2str(PlaceIdx,'%0#6.f');

|

||||

curPlaceIdx = curPlaceIdx(1:end-1);

|

||||

|

||||

saveName = strcat(curSamplingCounter, '_', curPlaceIdx);

|

||||

saveDir = strcat(DIR_SCIcolor, CellSize, '/');

|

||||

if( ~exist(saveDir, 'dir'))

|

||||

mkdir(saveDir)

|

||||

end

|

||||

|

||||

savePath = strcat(saveDir, saveName, ForB, '.png');

|

||||

|

||||

imwrite(SCIcolor, savePath);

|

||||

|

||||

end

|

||||

|

||||

|

|

@ -0,0 +1,302 @@

|

|||

{

|

||||

"cells": [

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 9,

|

||||

"metadata": {},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"import os\n",

|

||||

"import numpy as np \n",

|

||||

"import pandas as pd \n",

|

||||

"import tensorflow as tf\n",

|

||||

"import keras\n",

|

||||

"import matplotlib.pyplot as plt"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 115,

|

||||

"metadata": {},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"# Data info \n",

|

||||

"rootDir = '..your_data_path/'\n",

|

||||

"\n",

|

||||

"Dataset = 'NCLT'\n",

|

||||

"TrainOrTest = '/Train/'\n",

|

||||

"SequenceDate = '2012-01-15'\n",

|

||||

"\n",

|

||||

"SCImiddlePath = '/5. SCI_jet0to15_BackAug/'\n",

|

||||

"\n",

|

||||

"GridCellSize = '10'"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 116,

|

||||

"metadata": {},

|

||||

"outputs": [

|

||||

{

|

||||

"name": "stdout",

|

||||

"output_type": "stream",

|

||||

"text": [

|

||||

"/media/gskim/IRAP-ADV1/Data/ICRA2019/NCLT/Train/2012-01-15/5. SCI_jet0to15_BackAug/10/\n"

|

||||

]

|

||||

}

|

||||

],

|

||||

"source": [

|

||||

"DataPath = rootDir + Dataset + TrainOrTest + SequenceDate + SCImiddlePath + GridCellSize + '/'\n",

|

||||

"print(DataPath)"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 181,

|

||||

"metadata": {},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"def getTrainingDataNCLT(DataPath, SequenceDate): \n",

|

||||

"\n",

|

||||

" # info\n",

|

||||

" WholeData = os.listdir(DataPath)\n",

|

||||

" nWholeData = len(WholeData)\n",

|

||||

" print(str(nWholeData) + ' data exist in ' + SequenceDate)\n",

|

||||

" \n",

|

||||

" # read \n",

|

||||

" X = []\n",

|

||||

" y = []\n",

|

||||

" for ii in range(nWholeData):\n",

|

||||

" dataName = WholeData[ii]\n",

|

||||

" dataPath = DataPath + dataName\n",

|

||||

" \n",

|

||||

" dataTrajNodeOrder = int(dataName[0:5])\n",

|

||||

"\n",

|

||||

" SCI = plt.imread(dataPath)\n",

|

||||

" dataPlaceIndex = int(dataName[6:11])\n",

|

||||

" \n",

|

||||

" X.append(SCI)\n",

|

||||

" y.append(dataPlaceIndex)\n",

|

||||

" \n",

|

||||

" # progress message \n",

|

||||

" if ii%1000==0:\n",

|

||||

" print(str(format((ii/nWholeData)*100, '.1f')), '% loaded.')\n",

|

||||

" \n",

|

||||

" \n",

|

||||

" dataShape = SCI.shape\n",

|

||||

" \n",

|

||||

" # X\n",

|

||||

" X_nd = np.zeros(shape=(nWholeData, dataShape[0], dataShape[1], dataShape[2]))\n",

|

||||

" for jj in range(len(X)):\n",

|

||||

" X_nd[jj, :, :] = X[jj]\n",

|

||||

" X_nd = X_nd.astype('float32')\n",

|

||||

" \n",

|

||||

" # y (one-hot encoded)\n",

|

||||

" from sklearn.preprocessing import LabelEncoder\n",

|

||||

" lbl_enc = LabelEncoder()\n",

|

||||

" lbl_enc.fit(y)\n",

|

||||

" \n",

|

||||

" ClassesTheSequenceHave = lbl_enc.classes_\n",

|

||||

" nClassesTheSequenceHave = len(ClassesTheSequenceHave)\n",

|

||||

" \n",

|

||||

" y = lbl_enc.transform(y)\n",

|

||||

" y_nd = keras.utils.to_categorical(y, num_classes=nClassesTheSequenceHave)\n",

|

||||

"\n",

|

||||

" # log message \n",

|

||||

" print('Data size: %s' % nWholeData)\n",

|

||||

" print(' ')\n",

|

||||

" print('Data shape:', X_nd.shape)\n",

|

||||

" print('Label shape:', y_nd.shape)\n",

|

||||

" \n",

|

||||

" return X_nd, y_nd, lbl_enc\n",

|

||||

" "

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 182,

|

||||

"metadata": {

|

||||

"scrolled": false

|

||||

},

|

||||

"outputs": [

|

||||

{

|

||||

"name": "stdout",

|

||||

"output_type": "stream",

|

||||

"text": [

|

||||

"15078 data exist in 2012-01-15\n",

|

||||

"0.0 % loaded.\n",

|

||||

"6.6 % loaded.\n",

|

||||

"13.3 % loaded.\n",

|

||||

"19.9 % loaded.\n",

|

||||

"26.5 % loaded.\n",

|

||||

"33.2 % loaded.\n",

|

||||

"39.8 % loaded.\n",

|

||||

"46.4 % loaded.\n",

|

||||

"53.1 % loaded.\n",

|

||||

"59.7 % loaded.\n",

|

||||

"66.3 % loaded.\n",

|

||||

"73.0 % loaded.\n",

|

||||

"79.6 % loaded.\n",

|

||||

"86.2 % loaded.\n",

|

||||

"92.9 % loaded.\n",

|

||||

"99.5 % loaded.\n",

|

||||

"Data size: 15078\n",

|

||||

"Data shape: (15078, 40, 120, 3)\n",

|

||||

"Label shape: (15078, 579)\n"

|

||||

]

|

||||

}

|

||||

],

|

||||

"source": [

|

||||

"[X, y, lbl_enc] = getTrainingDataNCLT(DataPath, SequenceDate)"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 172,

|

||||

"metadata": {},

|

||||

"outputs": [

|

||||

{

|

||||

"name": "stdout",

|

||||

"output_type": "stream",

|

||||

"text": [

|

||||

"(40, 120, 3)\n",

|

||||

"(579,)\n"

|

||||

]

|

||||

}

|

||||

],

|

||||

"source": [

|

||||

"dataShape = X[0].shape\n",

|

||||

"labelShape = y[0].shape\n",

|

||||

"\n",

|

||||

"print(dataShape)\n",

|

||||

"print(labelShape)"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 173,

|

||||

"metadata": {},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"# Model \n",

|

||||

"from keras import backend as K\n",

|

||||

"K.clear_session()\n",

|

||||

"\n",

|

||||

"ModelName = 'my_model'\n",

|

||||

"\n",

|

||||

"Drop1 = 0.7\n",

|

||||

"Drop2 = 0.7\n",

|

||||

"\n",

|

||||

"KernelSize = 5\n",

|

||||

"\n",

|

||||

"nConv1Filter = 64\n",

|

||||

"nConv2Filter = 128\n",

|

||||

"nConv3Filter = 256\n",

|

||||

"\n",

|

||||

"nFCN1 = 64\n",

|

||||

"\n",

|

||||

"inputs = keras.layers.Input(shape=(dataShape[0], dataShape[1], dataShape[2]))\n",

|

||||

"x = keras.layers.Conv2D(filters=nConv1Filter, kernel_size=KernelSize, activation='relu', padding='same')(inputs)\n",

|

||||

"x = keras.layers.MaxPooling2D(pool_size=(2, 2), strides=None, padding='valid')(x)\n",

|

||||

"x = keras.layers.BatchNormalization()(x)\n",

|

||||

"x = keras.layers.Conv2D(filters=nConv2Filter, kernel_size=KernelSize, activation='relu', padding='same')(x)\n",

|

||||

"x = keras.layers.MaxPool2D()(x)\n",

|

||||

"x = keras.layers.BatchNormalization()(x)\n",

|

||||

"x = keras.layers.Conv2D(filters=nConv3Filter, kernel_size=KernelSize, activation='relu', padding='same')(x)\n",

|

||||

"x = keras.layers.MaxPool2D()(x)\n",

|

||||

"x = keras.layers.Flatten()(x)\n",

|

||||

"x = keras.layers.Dropout(rate=Drop1)(x)\n",

|

||||

"x = keras.layers.Dense(units=nFCN1)(x)\n",

|

||||

"x = keras.layers.Dropout(rate=Drop2)(x)\n",

|

||||

"outputs = keras.layers.Dense(units=labelShape[0], activation='softmax')(x)\n",

|

||||

"\n",

|

||||

"model = keras.models.Model(inputs=inputs, outputs=outputs)"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 174,

|

||||

"metadata": {},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"# Model Compile \n",

|

||||

"model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['acc'])\n",

|

||||

"model.build(None,)"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 1,

|

||||

"metadata": {

|

||||

"scrolled": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"#Train\n",

|

||||

"X_train = X\n",

|

||||

"y_train = y\n",

|

||||

"\n",

|

||||

"nEpoch = 200\n",

|

||||

"\n",

|

||||

"model.fit(X_train,\n",

|

||||